I need to send a 16-bit value to my GLSL pixel shader, and I expect it to show up as a normalized value between 0…1. I should then be able to multiply it by 65535 to convert it back to the integer value I want.

I’m having bad luck with this. I’m not sure what the Pixel Buffer format should be for this Texture.

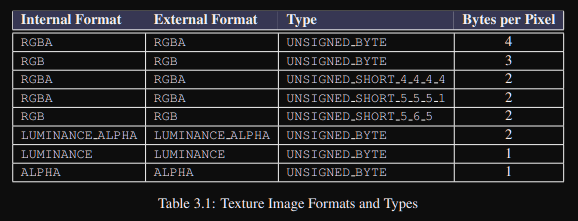

I’ve tried setting Pixel Format to 6406 (Alpha), 6410 (LuminanceAlpha), and 6409 (Luminance) to try to get something to work.

But nothing seems to be working or even making sense.

For HLSL, I use format R16G16, and it works as expected. The texture’s “r” channel is 16 bits and is read in the shader as a normalized float, and the same goes for “g”. I write my values to it as “ushort” (16-bit ints), and then in the shader I can convert them back to the ‘int’ value by multiplying by 65535. It works like a charm.
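To make the round trip I'm relying on concrete, here is a minimal CPU-side sketch in Python (not shader code). It assumes the GPU hands the shader `value / 65535` as a 32-bit float, which is my understanding of a normalized 16-bit channel; the helper names are made up for illustration.

```python
import struct

def to_float32(x):
    # Round a Python float to 32-bit precision, roughly what a shader float holds.
    return struct.unpack("f", struct.pack("f", x))[0]

def normalize_u16(value):
    # Assumed sampling behaviour: a 16-bit unsigned value becomes value / 65535.
    return to_float32(value / 65535.0)

def denormalize_u16(sample):
    # Scale back up and round to nearest, so small float error cannot drop us by one.
    return int(to_float32(sample * 65535.0) + 0.5)

if __name__ == "__main__":
    for v in (0, 1, 255, 256, 32768, 65535):
        print(v, normalize_u16(v), denormalize_u16(normalize_u16(v)))
```

The `+ 0.5` before truncating is the important part: a plain truncating cast can land one below the intended integer when the scaled float comes out slightly low.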

But for OpenGL, I can’t seem to get these other formats to make any sense. If I can’t get this working, I’ll have to resort to using RGBA format and combining two channels together like this:

int value = int(color.r * 65280.0 + color.g * 255.0 + 0.5);
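For reference, this is the arithmetic behind that fallback, sketched on the CPU in Python rather than in GLSL. It assumes the high byte goes in the red channel and the low byte in green, each stored as an 8-bit normalized value (byte / 255); `pack_u16` and `unpack_u16` are hypothetical names.

```python
def pack_u16(value):
    # Split a 16-bit value into high and low bytes, as two 8-bit channels would store it.
    hi = (value >> 8) & 0xFF
    lo = value & 0xFF
    # Assumed sampling behaviour: each 8-bit channel is read as byte / 255.
    return hi / 255.0, lo / 255.0

def unpack_u16(r, g):
    # 65280 = 255 * 256, so r * 65280 recovers hi * 256 and g * 255 recovers lo.
    # Round to nearest before truncating to absorb floating-point error.
    return int(r * 65280.0 + g * 255.0 + 0.5)

if __name__ == "__main__":
    for v in (0, 1, 255, 256, 4660, 65535):
        r, g = pack_u16(v)
        print(v, (r, g), unpack_u16(r, g))
```

This costs two channels of a 32bpp texture for one value, which is exactly the memory overhead I would like to avoid.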

===

For one shader we only need ONE 16-bit value, and would prefer to have the texture be 16bpp, not 32bpp, to save on RAM.

OpenGL seems to be our problem child.