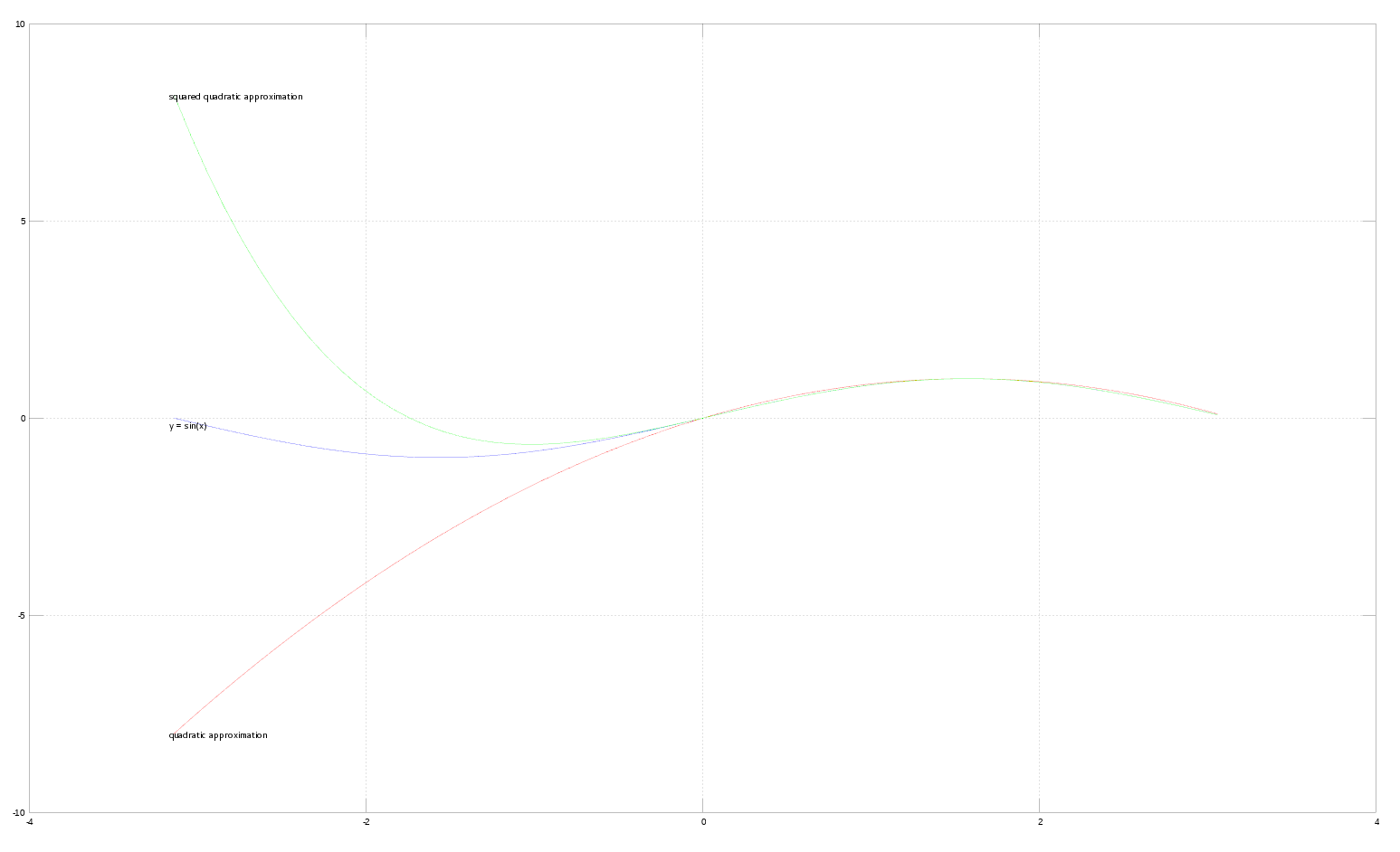

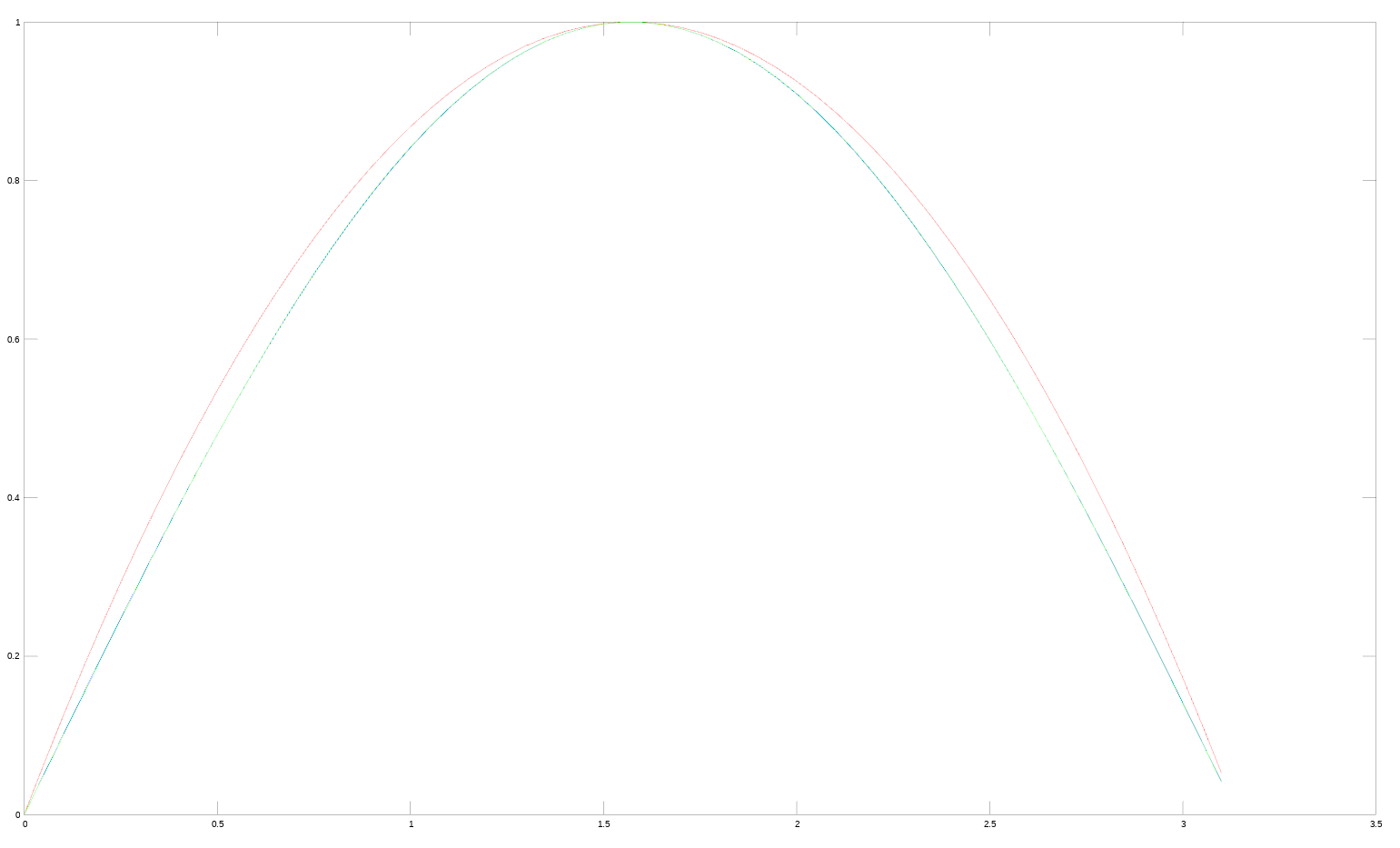

To get fast sines I use a Vector of floats as a look-up table. It is generated when the program starts (though this should also be possible at compile time), and after that you get fast and fairly accurate results, depending on the table's resolution. Generating a quarter of a period would be enough, since that data can be mirrored to fill in the rest.
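A quarter-wave table with mirroring could be sketched as follows. This is a hypothetical illustration, not the code from this post or an Urho3D API: `QuarterSineTable` is an invented name, `std::vector` stands in for Urho's `Vector`, and angles are passed as integer steps of 90°/`steps` so the quadrant can be picked with integer arithmetic.

```cpp
#include <cmath>
#include <vector>

// Hypothetical sketch: QuarterSineTable is not an Urho3D class, and
// std::vector stands in for Urho's Vector<float>.
class QuarterSineTable
{
public:
    // 'steps' = number of samples per quarter period (90 degrees); must be > 0.
    explicit QuarterSineTable(int steps)
        : steps_(steps), table_(steps + 1)
    {
        const double pi = 3.14159265358979323846;
        // steps + 1 samples cover [0, 90] degrees inclusive, so table_[steps]
        // holds sin(90 deg) = 1 and the mirrored quadrants line up exactly.
        for (int i = 0; i <= steps; ++i)
            table_[i] = static_cast<float>(std::sin(0.5 * pi * i / steps));
    }

    // 'phase' is an angle in units of (90 / steps) degrees; any int is accepted.
    float Sin(int phase) const
    {
        const int period = 4 * steps_;
        int p = phase % period;
        if (p < 0)
            p += period;                 // % can return a negative remainder
        const int quadrant = p / steps_;
        const int offset = p % steps_;
        switch (quadrant)
        {
        case 0: return table_[offset];            // 0..90: read forward
        case 1: return table_[steps_ - offset];   // 90..180: read backward
        case 2: return -table_[offset];           // 180..270: negate
        default: return -table_[steps_ - offset]; // 270..360: backward and negate
        }
    }

private:
    int steps_;
    std::vector<float> table_;
};
```

With `QuarterSineTable t(90)` each step is one degree, so `t.Sin(30)` and `t.Sin(150)` both read `table_[30]`, roughly 0.5. Keeping the phase an integer avoids floating-point range reduction; a float angle would need the `fmod` step mentioned in the quote below.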

Would this be a good way to get fast sines built into Urho, since sines are pretty versatile and useful for controlling the game world?

[quote="damu"]I also thought of using a look-up table with a resolution of, for example, 1 degree. It would have to be interpolated. I have the feeling it would be slower than one of the variants from or based on Michael's work, but it is worth benchmarking and comparing the precision. With caching it might actually be faster.

But it would also be an approximation (and require fmod), which cadaver might not approve of?

In general, a speedup there, and maybe in other places, would of course be good. It's a design decision, and I also thought about making such faster but less accurate versions optional via Urho CMake options. That may not matter much in this case, but there might be other places where an approximation gives a bigger performance gain, which might be worth the loss in accuracy.

Oh, I also saw a talk (here: youtu.be/Nsf2_Au6KxU?t=41m40s) by a Valve developer who worked on “Left 4 Dead”, and he showed a fast, approximate sincos using SIMD, so it seems that Valve uses such things. I have also heard of Unreal having various fast approximations.[/quote]
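The interpolated 1-degree table and the `fmod` range reduction discussed in the quote could look roughly like this. It is a minimal sketch under assumptions: `InterpolatedSineTable` is a hypothetical name, angles are in degrees, and it has not been benchmarked against `std::sin` or against the approximations mentioned above.

```cpp
#include <cmath>
#include <vector>

// Hypothetical sketch (not Urho3D API): a full-circle sine table with
// 1-degree resolution, read with linear interpolation between samples.
class InterpolatedSineTable
{
public:
    InterpolatedSineTable()
        : table_(361) // samples for 0..360 degrees, so index i + 1 is always valid
    {
        const double pi = 3.14159265358979323846;
        for (int i = 0; i <= 360; ++i)
            table_[i] = static_cast<float>(std::sin(i * pi / 180.0));
    }

    float Sin(float degrees) const
    {
        // Range reduction into [0, 360): this is where fmod is needed.
        float d = std::fmod(degrees, 360.0f);
        if (d < 0.0f)
            d += 360.0f;                 // fmod keeps the sign of its first argument
        int i = static_cast<int>(d);     // lower sample index
        if (i > 359)
            i = 359;                     // d can round up to exactly 360.0f
        const float t = d - static_cast<float>(i); // position between samples, 0..1
        return table_[i] + t * (table_[i + 1] - table_[i]);
    }

private:
    std::vector<float> table_;
};
```

With a step of h = π/180 radians, linear interpolation of sine is off by at most h²/8, roughly 3.8e-5, before float rounding. Whether that is faster than `std::sin` depends on the compiler, CPU and cache behavior, so benchmarking it, as suggested in the quote, is the only way to know.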

and fills it with a quarter of a sine function (ranging from 0° to 84.375°).
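Assuming the samples are evenly spaced and the table stops one step short of 90° (the other quadrants being mirrored), the endpoint 84.375° fixes the step size Δ and the number of entries N:

```latex
90^\circ - \Delta = 84.375^\circ \;\Rightarrow\; \Delta = 5.625^\circ,
\qquad N = \frac{90^\circ}{\Delta} = \frac{90}{5.625} = 16,
\qquad \text{last entry} = (N - 1)\,\Delta = 15 \times 5.625^\circ = 84.375^\circ
```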
