BTW, I definitely hit a stale CMake build issue on this today, on my cheesiest Intel integrated-GPU laptop. I pulled what I thought was the new Urho3D and built and installed it, but not cleanly. I ended up with a log full of errors about read-only depth-stencil views. I knew that couldn’t be a recent build, because on my other machine those errors had been demoted to warnings and it rendered fine. I nuked my Urho3D build and install directories, nuked the UrhoSampleProject build directory, and built everything from scratch. Now everything works fine.

Moral of the story: CMake can only handle so much “automatic” adjustment of an existing build. I think that, especially if the CMake build itself changes a lot, it’ll eventually just barf. That’s why one does out-of-source builds: it’s easy to nuke the build directory and start over clean.

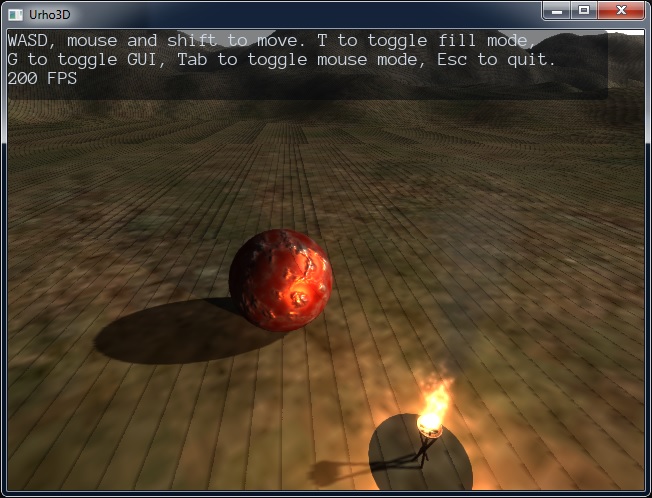

I’m surprised that both the AMD and Intel integrated GPUs run at 10 FPS, in both Debug and Release. The AMD part has been consistently 2x faster than the Intel in other tests, like Unigine Valley. I suppose it’s possible that Urho3D’s shadowing exercises GPU capabilities that are equally crappy on both, though. I will drag out my bigger laptop, which has a dedicated NVIDIA GeForce 8600M GT, to see if it runs any faster. All 3 machines have roughly similar CPU-side capabilities.

The GeForce does 15+ FPS in both Debug and Release. So, faster, but not fast. I know this is old HW, but I had hoped for better on such a seemingly trivial demo. I do recall various DirectX SDK demos choking the crap out of that card back in the day, though. Now I suppose I can learn what all my cheesy old HW can and cannot do.

I don’t know whether it’s related to the performance, but this is all DX10- or DX10.1-class HW running the DX11 API on top of it. According to the quote below, read-only depth-stencil views were introduced in DX11, so my HW may not be able to support simultaneous writable and read-only views of the same depth buffer.

[quote]

4. Read-only depth-stencil views: D3D10 let you bind depth-stencil buffers as shader resource views so that you could sample them in the pixel shader, but came with the restriction that you couldn’t have them simultaneously bound to the pipeline as both views simultaneously. That’s fine for SSAO or depth of field, but not so fine if you want to do deferred rendering and use depth-stencil culling. D3D10.1 added the ability to let you copy depth buffers asynchronously using the GPU, but that’s still not optimal. D3D11 finally makes everything right in the world by letting you create depth-stencil views with a read-only flag. So basically you can just create two DSV’s for your depth buffer, and switch to the read-only one when you want to do depth readback.[/quote]
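To make the quoted rule concrete, here is a toy C++ model of it. This is only a sketch under my own assumptions, not the D3D11 API and not how Urho3D does it: `DsvBinding`, `canSampleDepthWhileBound` and `lightingPassDsv` are hypothetical names, and the model ignores per-plane details (read-only depth vs. read-only stencil) and feature levels. In real D3D11 code, the read-only view is the second DSV created with `D3D11_DSV_READ_ONLY_DEPTH` (and/or `D3D11_DSV_READ_ONLY_STENCIL`) set in `D3D11_DEPTH_STENCIL_VIEW_DESC::Flags`.

```cpp
// Toy model of the quoted binding rule. All names here are hypothetical
// and are NOT part of the Direct3D API.

// How the depth buffer is bound as the depth-stencil target of a pass.
enum class DsvBinding { None, Writable, ReadOnly };

// May the shader sample the depth buffer (via an SRV) while the same
// buffer is bound as the depth-stencil target? Per the quote:
//  - D3D10/10.1: no read-only DSV exists, so any simultaneous DSV conflicts.
//  - D3D11: a read-only DSV may coexist with the SRV; a writable one may not.
bool canSampleDepthWhileBound(DsvBinding dsv, bool apiHasReadOnlyDsv) {
    switch (dsv) {
        case DsvBinding::None:     return true;   // not bound as a target at all
        case DsvBinding::Writable: return false;  // read/write hazard
        case DsvBinding::ReadOnly: return apiHasReadOnlyDsv;
    }
    return false;  // unreachable; keeps compilers quiet about the return path
}

// Hypothetical choice for a deferred lighting pass that wants to sample
// depth and keep depth-stencil culling: bind the read-only DSV when the API
// offers one; otherwise bind no DSV at all, which gives up depth-stencil
// culling (or requires a fallback such as the depth copy the quote mentions).
DsvBinding lightingPassDsv(bool apiHasReadOnlyDsv) {
    return apiHasReadOnlyDsv ? DsvBinding::ReadOnly : DsvBinding::None;
}
```

For example, `canSampleDepthWhileBound(DsvBinding::Writable, true)` is still false in this model: D3D11 doesn’t lift the restriction, it just gives you a second, read-only view to switch to.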