[quote="godan"]Looks good! Nice one.

What was your approach? This sort of UI is something I have to tackle at some point as well, so I'd be happy to help out with the effort.[/quote]

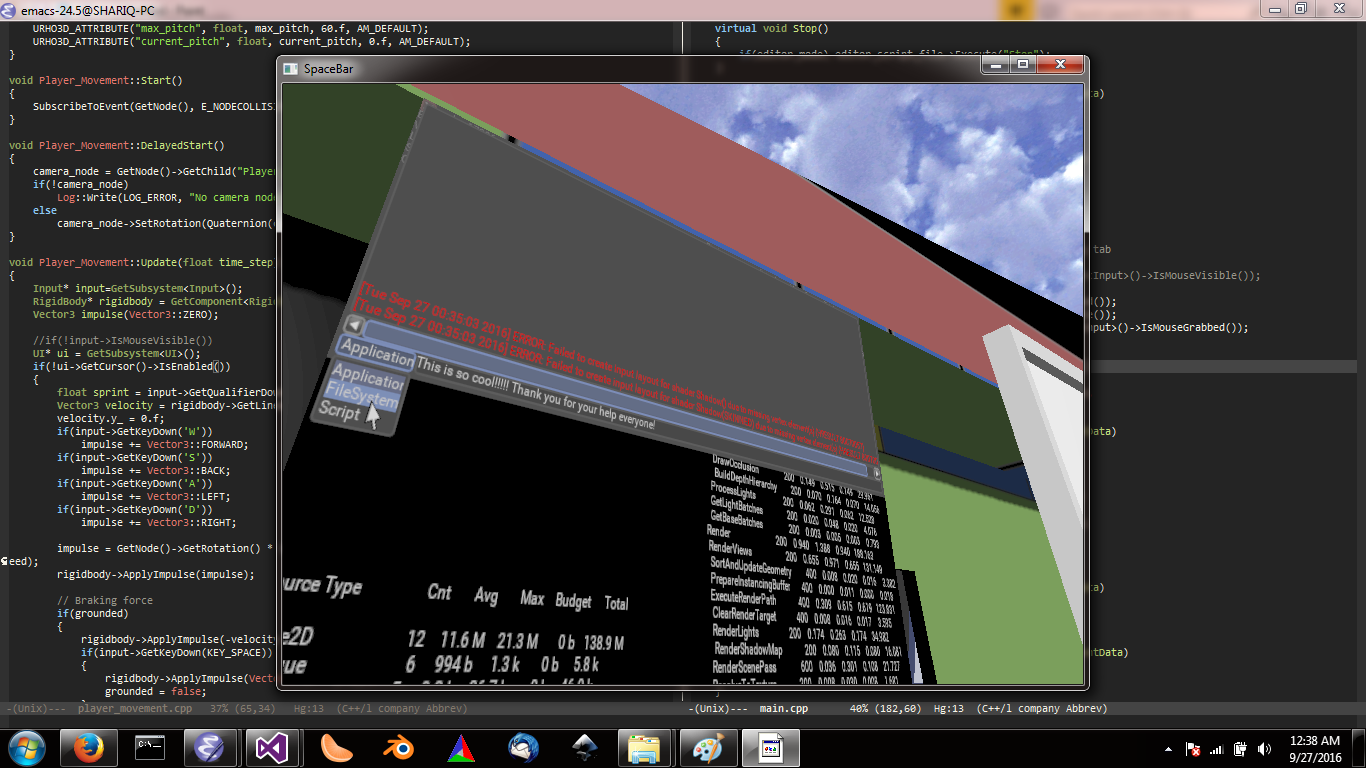

I used a simple renderpath on a viewport without a camera or scene. Here’s the renderpath:

    <renderpath>
        <command type="clear" color="0 0 0 1" depth="1.0" stencil="0" />
        <command type="renderui" output="ui_texture" />
    </renderpath>

The code is pretty simple as well. This is in a logic component with a plane attached to it:

    void Screen_Component::Start()
    {
        ResourceCache* cache = GetSubsystem<ResourceCache>();
        Context* context = GetContext();

        // Render target texture that the UI gets drawn into. The name has to
        // match the output="ui_texture" attribute in the renderpath.
        SharedPtr<Texture2D> render_texture(new Texture2D(context));
        render_texture->SetSize(800, 600, Graphics::GetRGBAFormat(), TEXTURE_RENDERTARGET);
        render_texture->SetFilterMode(FILTER_BILINEAR);
        render_texture->SetName("ui_texture");
        cache->AddManualResource(render_texture);

        // Material that shows the render target on the plane.
        SharedPtr<Material> material(new Material(context));
        material->SetTechnique(0, cache->GetResource<Technique>("Techniques/DiffSpec.xml"));
        material->SetTexture(TU_DIFFUSE, render_texture);

        StaticModel* model = GetComponent<StaticModel>();
        model->SetMaterial(material);

        // Viewport without a scene or camera; it only runs the UI renderpath.
        RenderSurface* surface = render_texture->GetRenderSurface();
        SharedPtr<Viewport> ui_viewport(new Viewport(context));
        XMLFile* ui_renderpath = cache->GetResource<XMLFile>("RenderPaths/UI_Render.xml");
        ui_viewport->SetRenderPath(ui_renderpath);
        ui_viewport->SetRect(IntRect(0, 0, 0, 0));
        surface->SetViewport(0, ui_viewport);

        // Cursor setup; it is kept hidden for now.
        UI* ui = GetSubsystem<UI>();
        Cursor* cursor = new Cursor(context);
        Image* image = cache->GetResource<Image>("Textures/UI.png");
        if (image)
        {
            cursor->DefineShape(CS_NORMAL, image, IntRect(0, 0, 12, 24), IntVector2(0, 0));
            cursor->DefineShape("Custom", image, IntRect(12, 0, 12, 36), IntVector2(0, 0));
        }
        cursor->SetVisible(false);
        ui->SetCursor(cursor);
    }

There's still a lot left to do, however. If the plane goes out of view, the UI is drawn to the screen like normal, so I have to come up with some way to disable the UI when the player is not looking at it. Since I plan on having most, if not all, of the UI in world space, this shouldn't be too complicated. There are probably other issues I haven't run into yet, but this seems like a good enough start. It would have been really nice to have multiple UI hierarchies, though. It seems I'll either have to modify Urho's source or figure out some other hack and simulate it by attaching empty UI nodes to the root node and turning them on and off when I need to.
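For the "disable the UI when the player is not looking" part, here is a rough sketch of one possible check, not something taken from the code above. It is plain C++ with a hypothetical `Vec3` stand-in and a hypothetical `IsScreenInView` helper; in Urho3D the inputs would presumably come from the camera node and the plane's node, using the engine's `Vector3`. It only tests the centre of the plane against a view cone, so it is a coarse approximation compared with a real frustum test. Urho3D's `Drawable::IsInView()` on the plane's `StaticModel` may already be enough, but I have not verified how it behaves in this setup.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for an engine vector type (Urho3D has its own Vector3).
struct Vec3
{
    float x, y, z;
};

static Vec3 Sub(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float Dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static float Length(const Vec3& v) { return std::sqrt(Dot(v, v)); }

// Returns true when the centre of the screen plane lies within maxAngleDegrees
// of the camera's view direction and the camera is on the front side of the plane.
// All names and parameters here are assumptions made for illustration.
bool IsScreenInView(const Vec3& cameraPos, const Vec3& cameraForward,
                    const Vec3& screenPos, const Vec3& screenNormal,
                    float maxAngleDegrees)
{
    assert(maxAngleDegrees > 0.0f && maxAngleDegrees < 180.0f);

    const Vec3 toScreen = Sub(screenPos, cameraPos);
    const float distance = Length(toScreen);
    const float forwardLength = Length(cameraForward);
    if (distance <= 0.0f || forwardLength <= 0.0f)
        return false; // Degenerate input: no meaningful direction to compare.

    // Cosine of the angle between the view direction and the direction to the screen.
    const float cosAngle = Dot(cameraForward, toScreen) / (distance * forwardLength);
    const float kPi = 3.14159265358979f;
    const float cosLimit = std::cos(maxAngleDegrees * kPi / 180.0f);
    if (cosAngle < cosLimit)
        return false; // Screen centre is outside the view cone.

    // The plane faces the camera when its normal points against the view ray.
    return Dot(screenNormal, toScreen) < 0.0f;
}
```

The result could then drive whatever "turn the UI off" mechanism ends up being used, for example hiding the UI elements while the check returns false.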

Yeah, I basically have to use Emacs everywhere after getting "emacs fingers". No other text editor feels right, and I'm not even that good with Emacs! That being said, the Consolas font looks even better in Emacs for some reason. Windows is no fun at all, but I have to use it because performance on Linux is not that great on my craptop (Intel HD 4000).