RayCast works on BillboardSet.

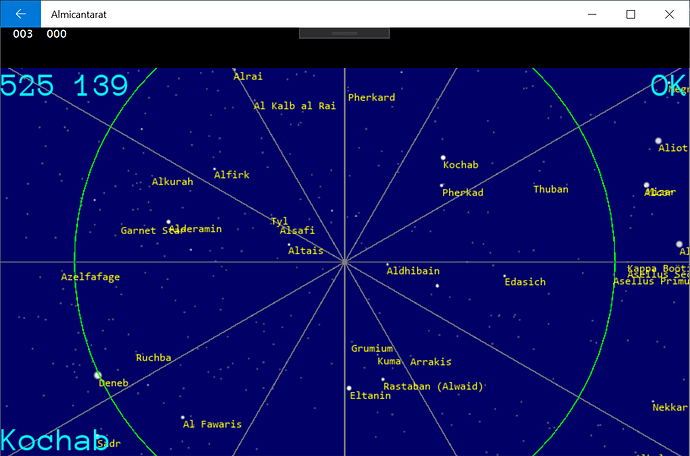

Continuing the discussion from Making an interactive star field:

But with UrhoSharp (the C# Urho3D wrapper for Xamarin), there is a bug caused by the 40-pixel black banner at the top of the screen.

So I needed to apply a correction to the cursor coordinates before sending a RayCast:

(The `int x` and `int y` parameters are only there so I can send multiple RayCasts, to have a chance of hitting my small billboard items when the user touches the screen…)

    private uint? StarIndex(TouchEndEventArgs e, int x, int y)
    {
        // Correction needed because of the 40-pixel black banner at the top of the screen:
        // shift the touch Y up by 40 px, then rescale it to the full screen height.
        float ey = ((float)(e.Y + y) - 40f) * (float)Graphics.Height / ((float)Graphics.Height - 40f);
        // GetScreenRay expects normalized coordinates in the range [0, 1].
        Ray cameraRay = camera.GetScreenRay((float)(e.X + x) / Graphics.Width, ey / Graphics.Height);
        RayQueryResult? result = octree.RaycastSingle(cameraRay, RayQueryLevel.Triangle, 100, DrawableFlags.Geometry);
        if (result != null)
        {
            // SubObject is the index of the billboard that was hit inside the BillboardSet.
            return result.Value.SubObject;
        }
        return null;
    }
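As a rough illustration of how the `x` and `y` offsets might be used, here is a minimal sketch that probes the exact touch point first and then a small grid of offsets around it, stopping at the first hit. The `TouchProbe` class, its `Offsets` and `FirstHit` methods, and the radius and step values are hypothetical names and numbers chosen for this example; they are not part of UrhoSharp.

```csharp
using System;
using System.Collections.Generic;

public static class TouchProbe
{
    // Yields (0, 0) first, then every other point of a square grid
    // spanning [-radius, radius] in both directions, spaced by step pixels.
    public static IEnumerable<(int dx, int dy)> Offsets(int radius, int step)
    {
        if (step <= 0) throw new ArgumentOutOfRangeException(nameof(step));
        yield return (0, 0);
        for (int dy = -radius; dy <= radius; dy += step)
            for (int dx = -radius; dx <= radius; dx += step)
                if (dx != 0 || dy != 0)
                    yield return (dx, dy);
    }

    // Calls probe(dx, dy) for each offset and returns the first non-null
    // result, or null if every probe misses.
    public static uint? FirstHit(Func<int, int, uint?> probe, int radius, int step)
    {
        foreach (var (dx, dy) in Offsets(radius, step))
        {
            uint? hit = probe(dx, dy);
            if (hit != null) return hit;
        }
        return null;
    }
}
```

Inside the touch handler this could be called as `TouchProbe.FirstHit((dx, dy) => StarIndex(e, dx, dy), 8, 4)`, which would send up to 25 RayCasts per touch. A larger radius makes small billboards easier to hit but costs more octree queries.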

This code works with Xamarin.Forms for Android and UWP.
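The correction itself can be isolated from the engine calls. Multiplying by `Graphics.Height` and then dividing by it again when normalizing cancels out, so the normalized Y passed to `GetScreenRay` reduces to `(touchY - banner) / (height - banner)`. The sketch below shows that simplified form with the banner height as a parameter, in case the 40-pixel value turns out to differ on another device (that possibility is an assumption, not something confirmed here). `BannerCorrection` and `NormalizedY` are hypothetical names.

```csharp
using System;

public static class BannerCorrection
{
    // Maps a touch Y coordinate (measured from the top of the screen,
    // banner included) to the normalized [0, 1] range of the visible
    // area below the banner.
    public static float NormalizedY(float touchY, float screenHeight, float bannerHeight)
    {
        if (screenHeight <= bannerHeight)
            throw new ArgumentException("screenHeight must be greater than bannerHeight");
        return (touchY - bannerHeight) / (screenHeight - bannerHeight);
    }
}
```

With this helper, the second argument of `GetScreenRay` in `StarIndex` would become `BannerCorrection.NormalizedY(e.Y + y, Graphics.Height, 40f)`.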