TL;DR:

I noticed that the Texture class has a format_ member. I assume this holds the texture format we set, such as GL_RGB on OpenGL.

Since format_ is just an unsigned, I was wondering how its integer values map to the different OpenGL texture formats (GL_XXXX). I searched the Urho3D source code but could not find anything.

The only place I found where it is set is the SetSize functions, e.g.

bool Texture2D::SetSize(int width, int height, unsigned format, TextureUsage usage, int multiSample, bool autoResolve)

I noticed that the OpenGL texture function returns colors as floats in [0.0, 1.0]. Is there any format_ setting that would let me read the raw 8-bit data directly, e.g. as integers in [0, 255]?

=====================================================

Background

I am using the default texture unit 0 for my heightmap terrain texture.

When I generate the texture image, I simply do:

const float height = GetHeightFromSource(x, y);

const int16_t height_16bit = static_cast<int16_t>(height);

const unsigned char r = height_16bit >> 8;

const unsigned char g = height_16bit & 0xFF;

Note that my heightmap contains negative values. This is not a problem, since we can handle them when decoding the texture, and I verified that decoding works as expected:

Height height = (R << 8) + G;
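The encode and decode steps above can be checked on the CPU with a small round trip. This is only a sketch: `PackedHeight`, `EncodeHeight`, `DecodeHeight` and `CheckRoundTrip` are hypothetical names, not part of Urho3D or of my actual code, and the decode assumes the two bytes hold a two's complement 16-bit value.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical container for the two 8-bit channels written to the texture.
struct PackedHeight {
    unsigned char r;  // high byte
    unsigned char g;  // low byte
};

// Split a signed 16-bit height into two bytes.
PackedHeight EncodeHeight(std::int16_t height) {
    // Convert to unsigned first so the shift is well defined for negative input.
    const std::uint16_t bits = static_cast<std::uint16_t>(height);
    PackedHeight p;
    p.r = static_cast<unsigned char>(bits >> 8);
    p.g = static_cast<unsigned char>(bits & 0xFF);
    return p;
}

// Rebuild the signed height from the two bytes.
int DecodeHeight(PackedHeight p) {
    const int value = (p.r << 8) + p.g;             // 0..65535
    return value >= 32768 ? value - 65536 : value;  // top bit set means negative
}

// Round-trip check over the full int16_t range.
void CheckRoundTrip() {
    for (int h = -32768; h <= 32767; ++h) {
        assert(DecodeHeight(EncodeHeight(static_cast<std::int16_t>(h))) == h);
    }
}
```

Calling `CheckRoundTrip()` from a test or from `main` exercises every possible height, including the negative ones.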

Now I want to use this texture image in an OpenGL vertex shader for heightmap displacement. The texture function returns colors as floats in [0.0, 1.0], which is fine since we can always map back to integers in [0, 255].

I can either simply do

int height = int(r * 255) * 256 + int(g * 255);

or, as someone on Stack Overflow mentioned:

int ri = int(floor(r >= 1.0 ? 255.0 : r * 256.0));

int gi = int(floor(g >= 1.0 ? 255.0 : g * 256.0));
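To compare the two mappings outside the shader, here is a minimal CPU sketch. It assumes an 8-bit channel reaches the shader as byte / 255, and it only approximates GPU arithmetic with ordinary C++ floats. All function names are made up for illustration.

```cpp
#include <cassert>
#include <cmath>

// Emulates the normalized value the shader would see for an 8-bit channel
// (assumption: the sampler returns byte / 255).
float NormalizedChannel(int byteValue) {
    return static_cast<float>(byteValue) / 255.0f;
}

// First variant: scale by 255 and truncate toward zero.
int ToByteTruncate(float c) {
    return static_cast<int>(c * 255.0f);
}

// Second variant: scale by 256, floor, and clamp the top end to 255.
int ToByteFloor256(float c) {
    return static_cast<int>(std::floor(c >= 1.0f ? 255.0f : c * 256.0f));
}

// The floor-by-256 variant recovers every byte value in this emulation.
void CheckFloor256() {
    for (int b = 0; b <= 255; ++b) {
        assert(ToByteFloor256(NormalizedChannel(b)) == b);
    }
}
```

The truncating variant depends on `c * 255` landing exactly on or just above the original integer. If it lands slightly below, the truncation returns a value that is one too small. Whether that actually happens depends on the hardware's float arithmetic, which this sketch does not reproduce.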

As for negative values, unfortunately we don't have bit manipulation in the shader, but that's not a problem.

I can do

vec2 heights = texture(sHeightMap0, texCoord).rg;

if (heights.r >= 0.5) {

return ((int(heights.r * 255) * 256 + int(heights.g * 255)) - 256 * 256) * scale;

} else {

return (heights.r * 256 + heights.g) * 256 * scale;

}

I believe this is correct.

For example, for height = -1, we encode into R = 1111 1111, G = 1111 1111.

So in shader we get: heights.r = 1.0, heights.g = 1.0.

Then we should get -1 * scale as the final result.
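The same check can be written as a CPU stand-in for the shader decode. This is a sketch under assumptions, not my actual shader: `DecodeSignedHeight` is a hypothetical name, the channels are assumed to arrive as byte / 255, and it rounds to the nearest integer instead of truncating with `int()`.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical CPU stand-in for the shader decode. r and g are the normalized
// channel values in [0, 1], as the texture lookup would return them.
float DecodeSignedHeight(float r, float g, float scale) {
    // Round to nearest so a value just below an integer is not dropped by one.
    const int hi = static_cast<int>(std::floor(r * 255.0f + 0.5f));
    const int lo = static_cast<int>(std::floor(g * 255.0f + 0.5f));
    int value = hi * 256 + lo;           // 0..65535
    if (value >= 32768) value -= 65536;  // same split as the heights.r >= 0.5 test
    return static_cast<float>(value) * scale;
}

// Every (R, G) byte pair should decode to its two's complement value.
void CheckSignedDecode() {
    for (int hi = 0; hi <= 255; ++hi) {
        for (int lo = 0; lo <= 255; ++lo) {
            int expected = hi * 256 + lo;
            if (expected >= 32768) expected -= 65536;
            const float got = DecodeSignedHeight(hi / 255.0f, lo / 255.0f, 1.0f);
            assert(got == static_cast<float>(expected));
        }
    }
}
```

With r = 1.0 and g = 1.0 this returns -1 * scale, matching the example above. An error of one in the high byte would shift the result by 256, so that is one place worth checking when comparing against the shader output.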

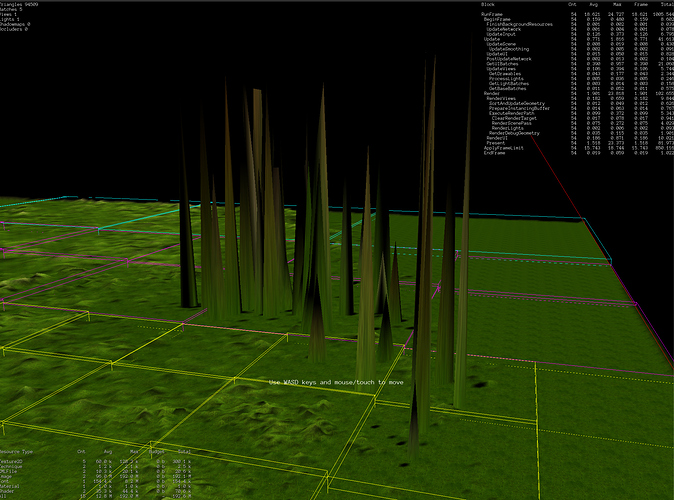

However, the terrain shows many sharp spikes in the regions with negative values.

I checked the raw height values and evaluated them by hand with my formula, and everything should be correct.

This does not make any sense to me. Have I made a stupid mistake somewhere?