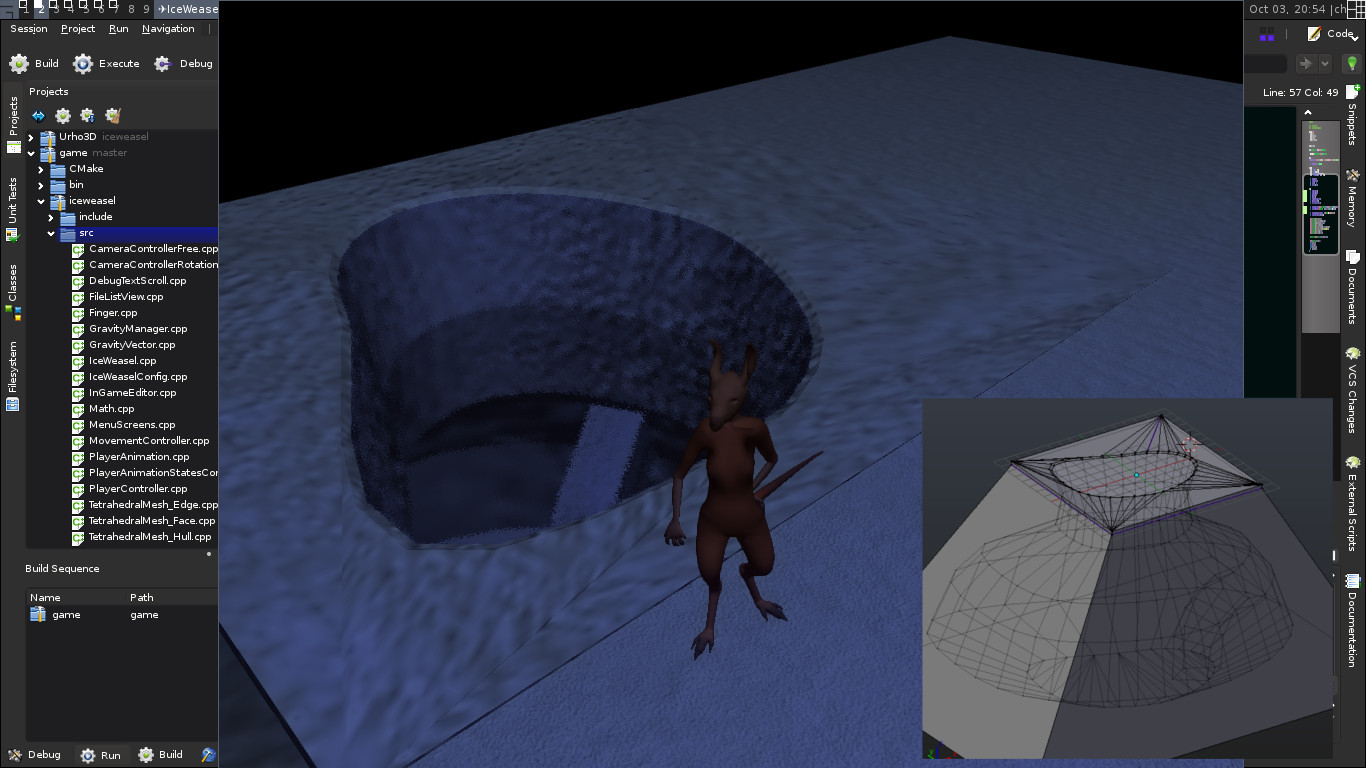

I finally feel comfortable enough using Urho3D to begin working on a game with a team. We’ve envisioned a multiplayer first-person shooter with a cyberpunk, at times perhaps “surreal”, atmosphere.

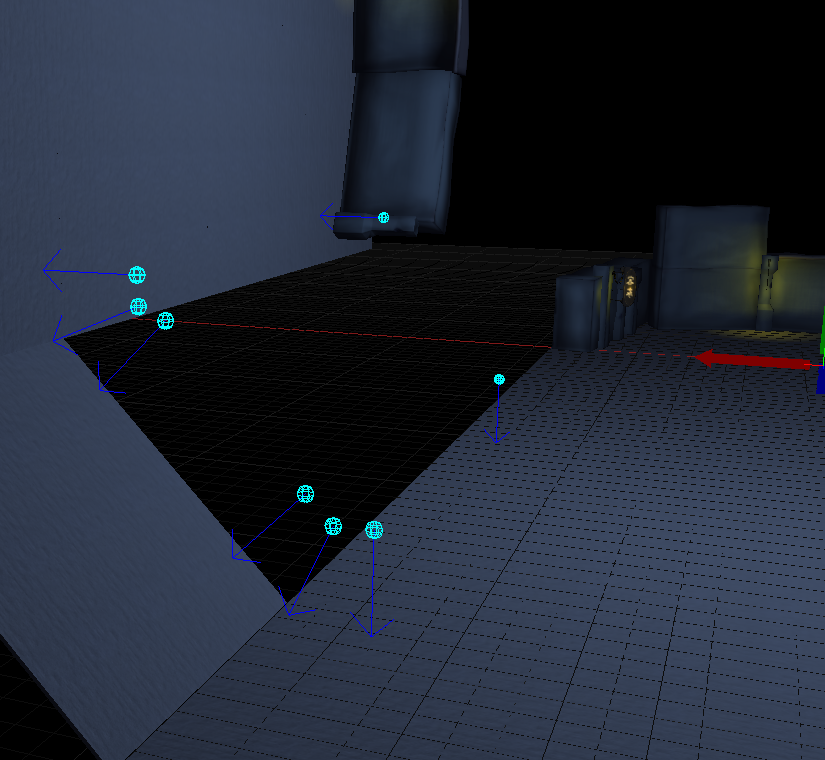

One unique feature of this game will be “localized gravity”. I’ve modded the editor to allow placement of “gravity probes”, which can be seen in the following screenshot as blue arrows:

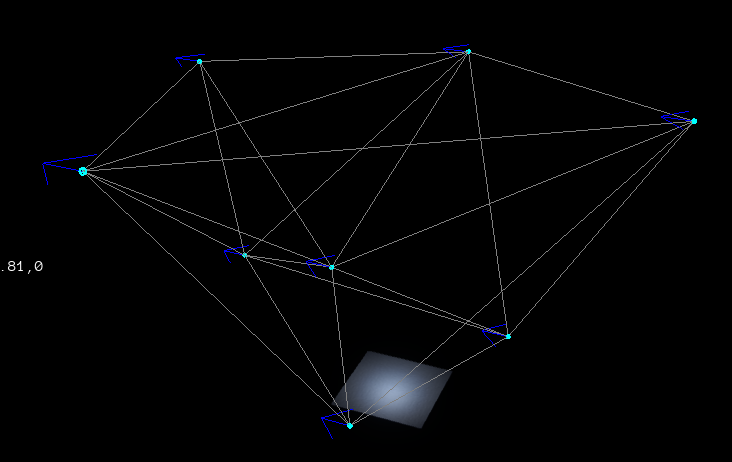

Each probe defines a direction vector and a multiplier. The scene can be queried for the gravitational force at any location in 3D space. The system is very rough right now (I search for the closest probe and directly use its direction and force, so transitions are very jagged), but I’m thinking about building a tetrahedral mesh out of the probes and using some form of spatial partitioning to decrease lookup time. That way you can query which tetrahedron you’re in and interpolate the gravity parameters between its 4 vertices, so transitioning between probes would be smooth and unnoticeable.
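To make the two approaches concrete, here is a rough standalone sketch, not the actual game code. `Vec3` is a stand-in for the engine's vector type, and `GravityProbe`, `NearestProbeGravity`, `BarycentricWeights` and `InterpolateGravity` are hypothetical names made up for illustration. It assumes the gravity at a probe is simply `direction * multiplier`, and that the tetrahedron containing the query point has already been found (the spatial partitioning part is left out).

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Stand-in for the engine's 3D vector type (assumption, for a standalone sketch).
struct Vec3 {
    double x, y, z;
};

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
inline double Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 Cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Hypothetical probe layout: where it sits, which way it pulls, and how hard.
struct GravityProbe {
    Vec3 position;
    Vec3 direction;
    double multiplier;
};

// Rough approach: linear scan for the closest probe, use its gravity directly.
// Returns a zero vector if there are no probes.
Vec3 NearestProbeGravity(const std::vector<GravityProbe>& probes, Vec3 p) {
    double bestDist2 = std::numeric_limits<double>::infinity();
    Vec3 gravity{0.0, 0.0, 0.0};
    for (const GravityProbe& probe : probes) {
        const Vec3 d = probe.position - p;
        const double dist2 = Dot(d, d);
        if (dist2 < bestDist2) {
            bestDist2 = dist2;
            gravity = probe.direction * probe.multiplier;
        }
    }
    return gravity;
}

// Six times the signed volume of the tetrahedron (a, b, c, d).
inline double SignedVolume6(Vec3 a, Vec3 b, Vec3 c, Vec3 d) {
    return Dot(Cross(b - a, c - a), d - a);
}

// Barycentric weights of p with respect to the tetrahedron v[0..3].
// Each weight is the volume obtained by replacing one vertex with p,
// divided by the full volume. Returns false for a degenerate (flat) tetrahedron.
bool BarycentricWeights(const std::array<Vec3, 4>& v, Vec3 p, std::array<double, 4>& w) {
    const double total = SignedVolume6(v[0], v[1], v[2], v[3]);
    if (std::fabs(total) < 1e-12) return false;
    w[0] = SignedVolume6(p, v[1], v[2], v[3]) / total;
    w[1] = SignedVolume6(v[0], p, v[2], v[3]) / total;
    w[2] = SignedVolume6(v[0], v[1], p, v[3]) / total;
    w[3] = SignedVolume6(v[0], v[1], v[2], p) / total;
    return true;
}

// Smooth approach: blend the gravity vectors of the 4 probes of one tetrahedron.
// Returns a zero vector if the tetrahedron is degenerate.
Vec3 InterpolateGravity(const std::array<GravityProbe, 4>& tet, Vec3 p) {
    const std::array<Vec3, 4> v = {{tet[0].position, tet[1].position,
                                    tet[2].position, tet[3].position}};
    std::array<double, 4> w{};
    if (!BarycentricWeights(v, p, w)) return {0.0, 0.0, 0.0};
    Vec3 gravity{0.0, 0.0, 0.0};
    for (std::size_t i = 0; i < 4; ++i)
        gravity = gravity + tet[i].direction * (tet[i].multiplier * w[i]);
    return gravity;
}
```

If all four weights are non-negative the point is inside the tetrahedron, so the same function can double as the containment test during lookup. Note that this blends the combined gravity vectors; interpolating direction and multiplier separately (and renormalizing the direction) is another option and would behave differently when neighbouring probes point in opposite directions.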

With this system it will be possible to create things à la M.C. Escher, but most likely we’ll be going for something less drastic and less confusing. Having half of the map at a 90° angle could open up some interesting tactics for snipers, as they’d be able to shoot across half of the map.

Here is a video: