Overview

Since Sonoid finished working on PBR, he has passed the torch to me and given me the rights to change and redistribute the code as I see fit. Since taking on the project I have made a couple of small changes. The first was to allow PBR to work alongside the legacy renderer in Forward Rendering. The second was to switch the diffuse model from Lambert to Burley, because I personally prefer it.
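To illustrate what the switch from Lambert to Burley means, here is a minimal C++ sketch of both diffuse models for a single colour channel. It assumes the standard formulation from Burley's 2012 Disney course notes; the shaders in the repository may use a slightly different variant, and the function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical sketch of two diffuse BRDFs (single colour channel).
// Assumption: Burley term as in "Physically-Based Shading at Disney" (2012);
// the PBR shaders may differ in detail.

constexpr float kPi = 3.14159265358979f;

// Lambert: constant BRDF, independent of light and view angles.
float lambertDiffuse(float albedo) {
    return albedo / kPi;
}

// Schlick-style weight (1 - cos)^5 used by the Burley retro-reflection term.
float schlickWeight(float cosTheta) {
    float m = std::max(0.0f, std::min(1.0f, 1.0f - cosTheta));
    float m2 = m * m;
    return m2 * m2 * m;
}

// Burley: Lambert scaled by a light/view dependent factor that darkens
// grazing angles on smooth surfaces and brightens them on rough ones.
//   NdotL, NdotV: cosines between the normal and the light / view directions
//   LdotH:        cosine between the light direction and the half vector
float burleyDiffuse(float albedo, float roughness,
                    float NdotL, float NdotV, float LdotH) {
    assert(roughness >= 0.0f && roughness <= 1.0f);
    float fd90 = 0.5f + 2.0f * roughness * LdotH * LdotH;
    float lightScatter = 1.0f + (fd90 - 1.0f) * schlickWeight(NdotL);
    float viewScatter = 1.0f + (fd90 - 1.0f) * schlickWeight(NdotV);
    return lambertDiffuse(albedo) * lightScatter * viewScatter;
}
```

At normal incidence both functions return the same value; at grazing angles Burley returns more light than Lambert for rough surfaces and less for smooth ones, which is the visual difference between the two models.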

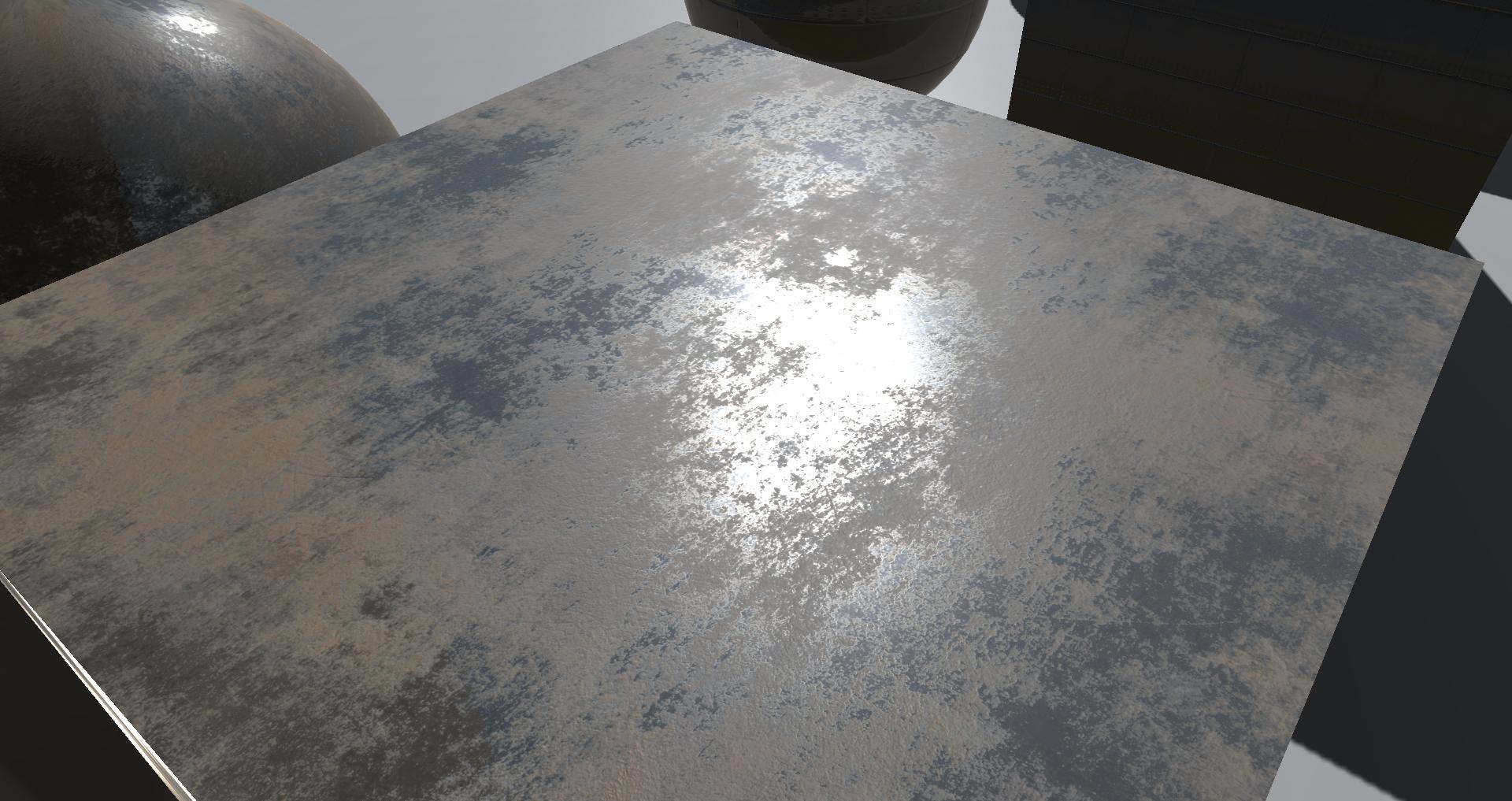

Results:

[video]https://youtu.be/6qfIHsG_MSU[/video]

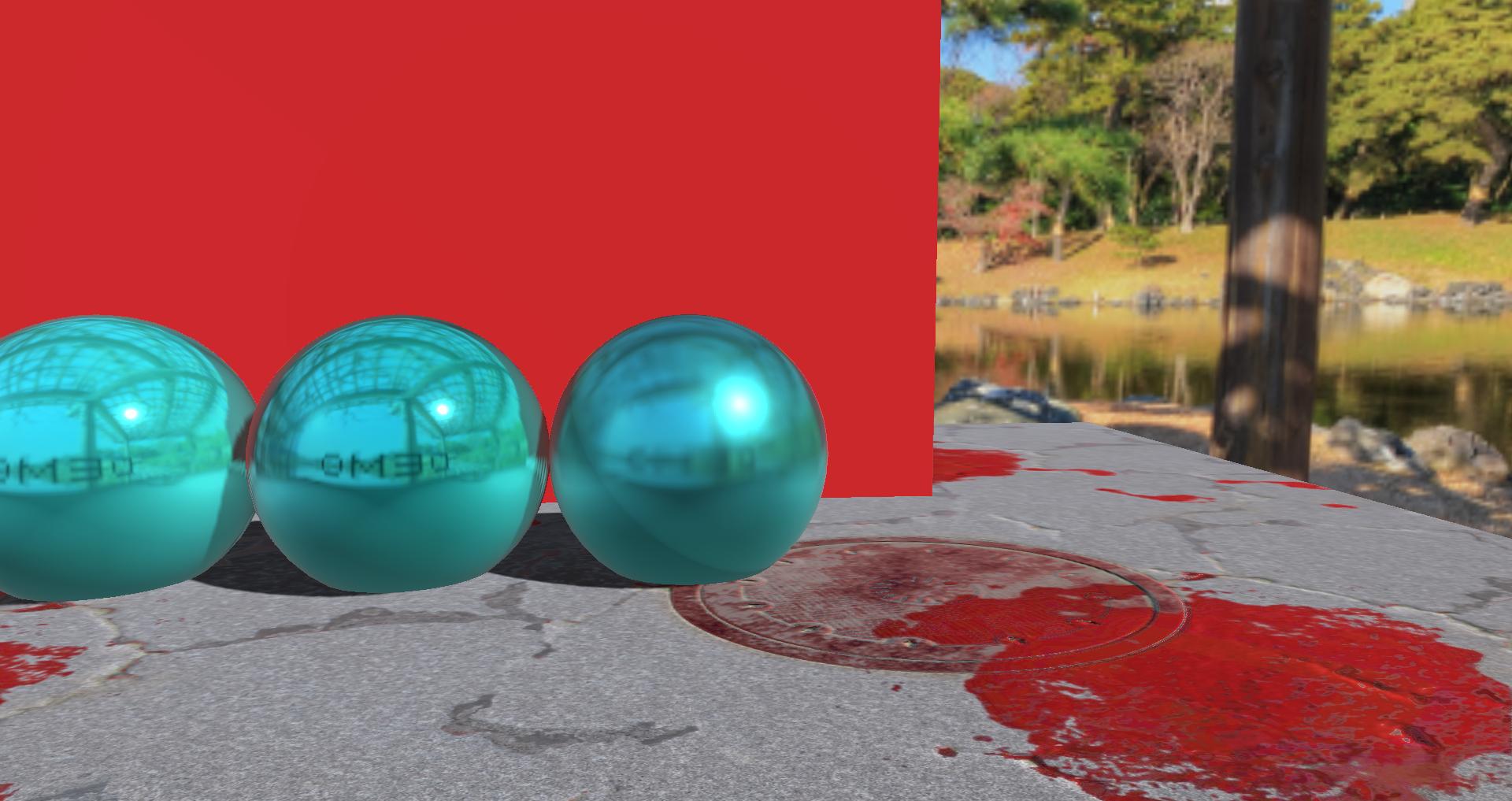

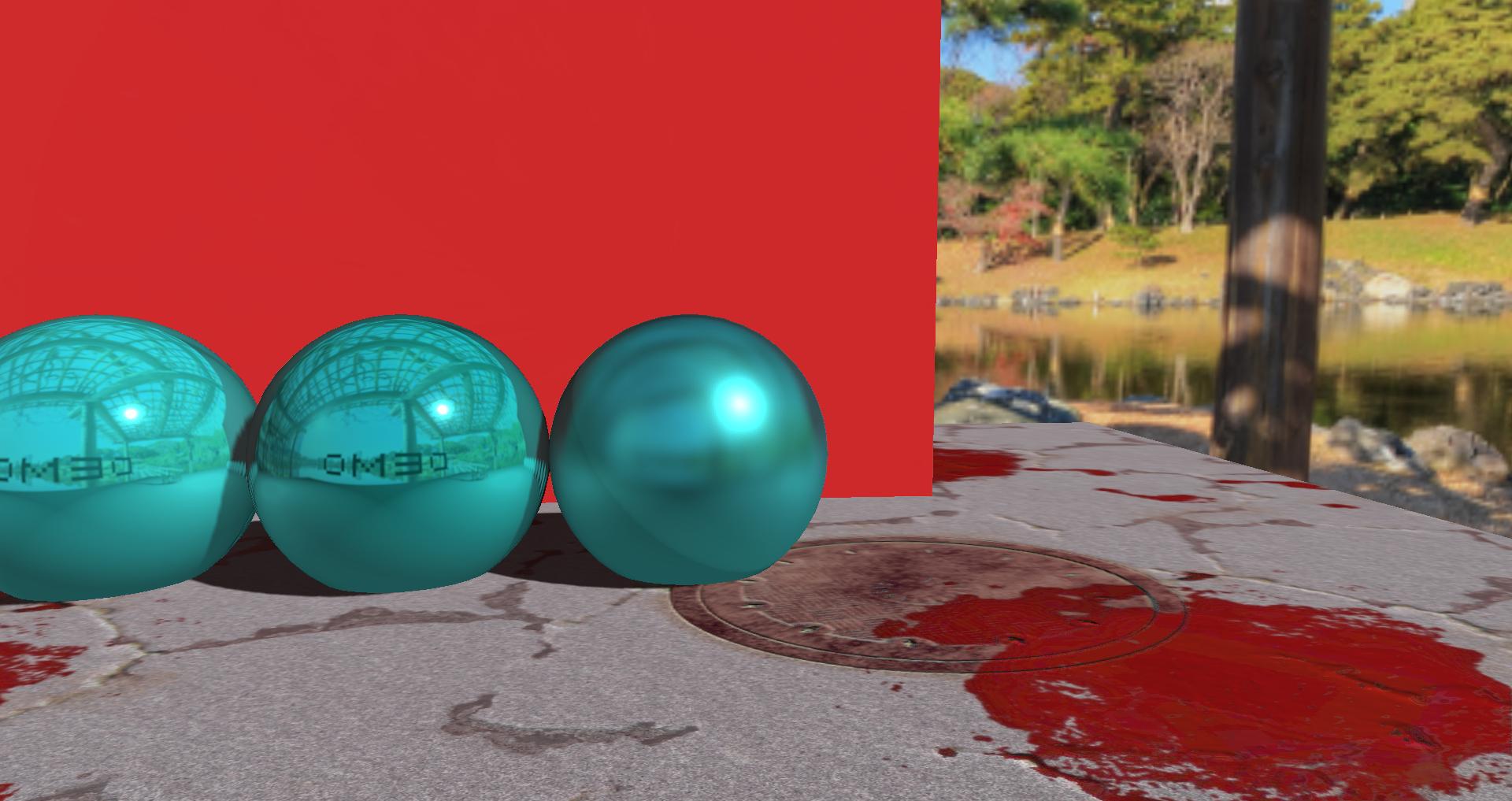

Images:

How to use

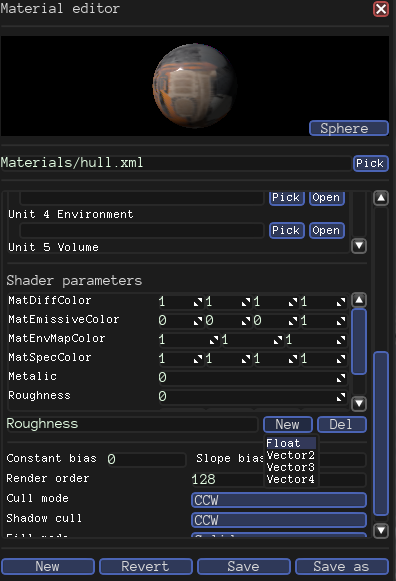

When you create a material, you will need to use one of the PBR render techniques located in CoreData/Techniques/PBR.

If the selected technique uses diffuse, you will need to input an albedo texture into the diffuse channel. An albedo texture is similar to a diffuse texture, but it does not contain any lighting data.

If the selected technique uses normal, you will need to input a traditional normal map into the normal channel.

If the selected technique uses Metallic/Roughness, you will need to input a PBR properties map into the specular channel. The PBR properties map stores Roughness in the red color channel and Metallic in the green color channel.
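If your roughness and metallic maps come as two separate grayscale images, they have to be combined into that layout before use. The C++ sketch below shows the per-pixel packing under stated assumptions: the `Rgba8` struct and `packPbrProperties` function are hypothetical, and the blue and alpha channels are filled with 0 and 255 on the assumption that they are unused.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: builds the pixels of a PBR properties map from two
// 8-bit grayscale maps of the same size.
// Layout: roughness -> red, metallic -> green.
// Assumption: blue and alpha are unused, so they are set to 0 and 255.
struct Rgba8 {
    std::uint8_t r, g, b, a;
};

std::vector<Rgba8> packPbrProperties(const std::vector<std::uint8_t>& roughness,
                                     const std::vector<std::uint8_t>& metallic) {
    assert(roughness.size() == metallic.size());
    std::vector<Rgba8> pixels(roughness.size());
    for (std::size_t i = 0; i < pixels.size(); ++i) {
        pixels[i] = Rgba8{roughness[i], metallic[i], 0, 255};
    }
    return pixels;
}
```

The resulting pixel array can then be written out with any image library and assigned to the specular channel of the material.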

If the selected technique uses emissive, you will need to input an emissive texture into the emissive channel.

For additional control over the material, you can add Roughness and Metallic as material parameters. These values let you adjust the values read from the PBR properties map, or set default values for materials that have no PBR properties map.
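The post does not say exactly how the parameters and the map are combined. The C++ sketch below shows one plausible scheme, assuming the parameters scale the sampled map values when a map is present and are used directly otherwise; `PbrSample` and `resolvePbrProperties` are hypothetical names, and the actual shaders may combine the values differently.

```cpp
#include <algorithm>
#include <cassert>
#include <optional>

// Hypothetical sketch: combining per-material Roughness/Metallic parameters
// with an optional PBR properties map texel.
// Assumption: with a map, the parameters scale the sampled values;
// without a map, the parameters are used directly as defaults.
struct PbrSample {
    float roughness;  // red channel of the properties map
    float metallic;   // green channel of the properties map
};

PbrSample resolvePbrProperties(std::optional<PbrSample> mapTexel,
                               float roughnessParam, float metallicParam) {
    assert(roughnessParam >= 0.0f && metallicParam >= 0.0f);
    PbrSample result = mapTexel
        ? PbrSample{mapTexel->roughness * roughnessParam, mapTexel->metallic * metallicParam}
        : PbrSample{roughnessParam, metallicParam};
    // Keep both values in the [0, 1] range expected by the lighting model.
    result.roughness = std::min(std::max(result.roughness, 0.0f), 1.0f);
    result.metallic = std::min(std::max(result.metallic, 0.0f), 1.0f);
    return result;
}
```

Under this scheme, a parameter value of 1 leaves the map untouched, while a material without a map simply uses the parameter values as its roughness and metallic.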

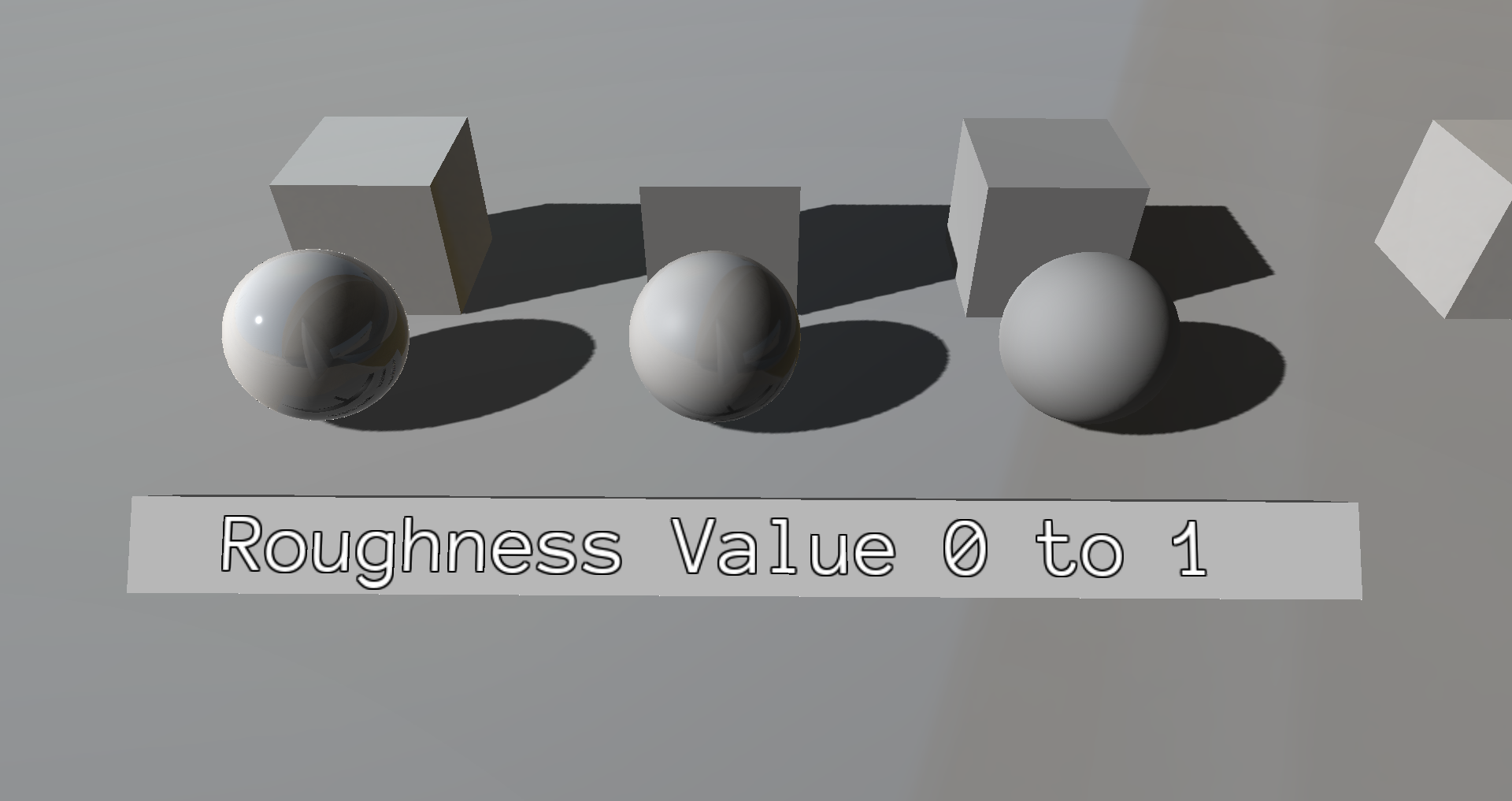

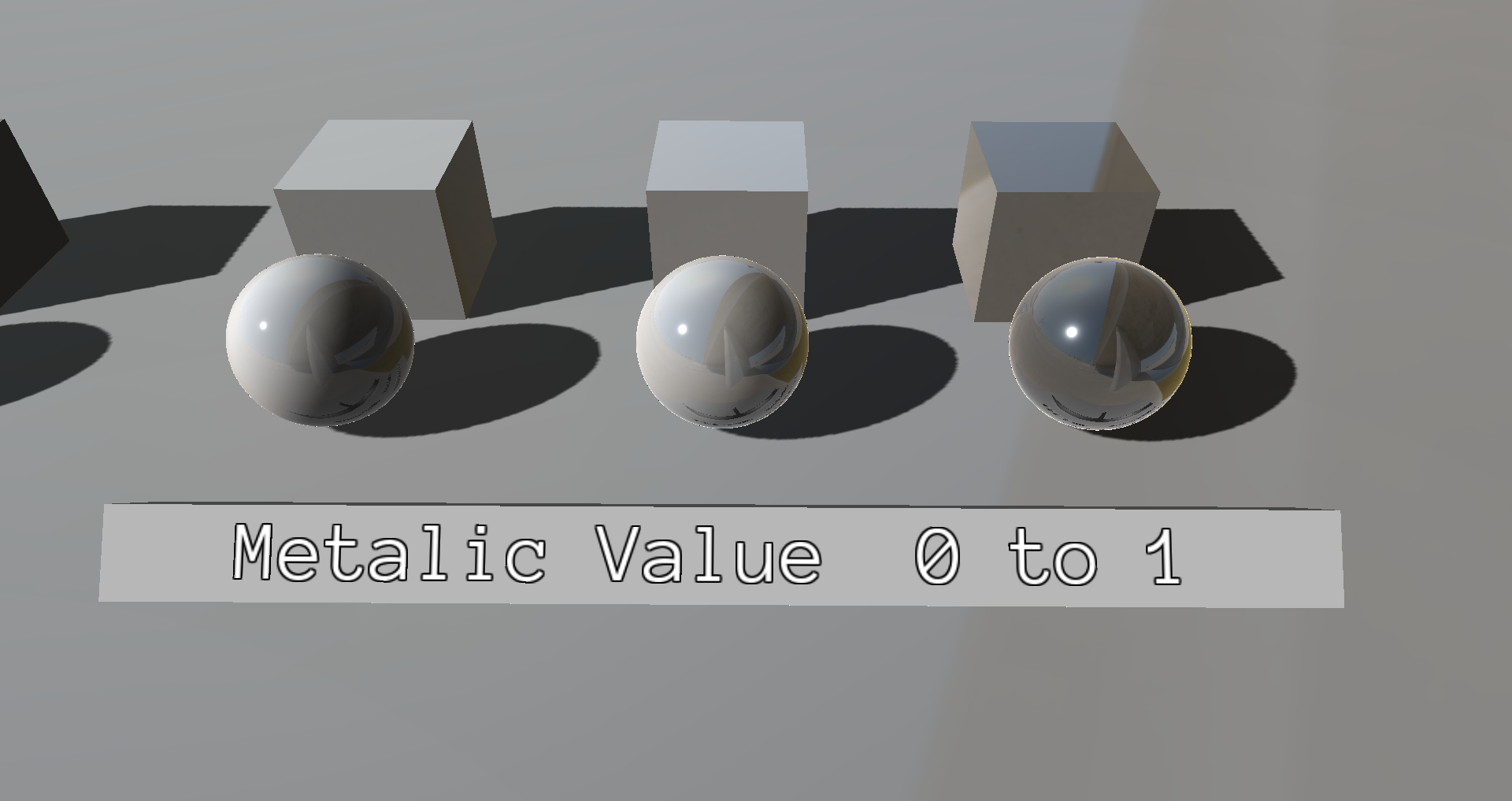

Effect

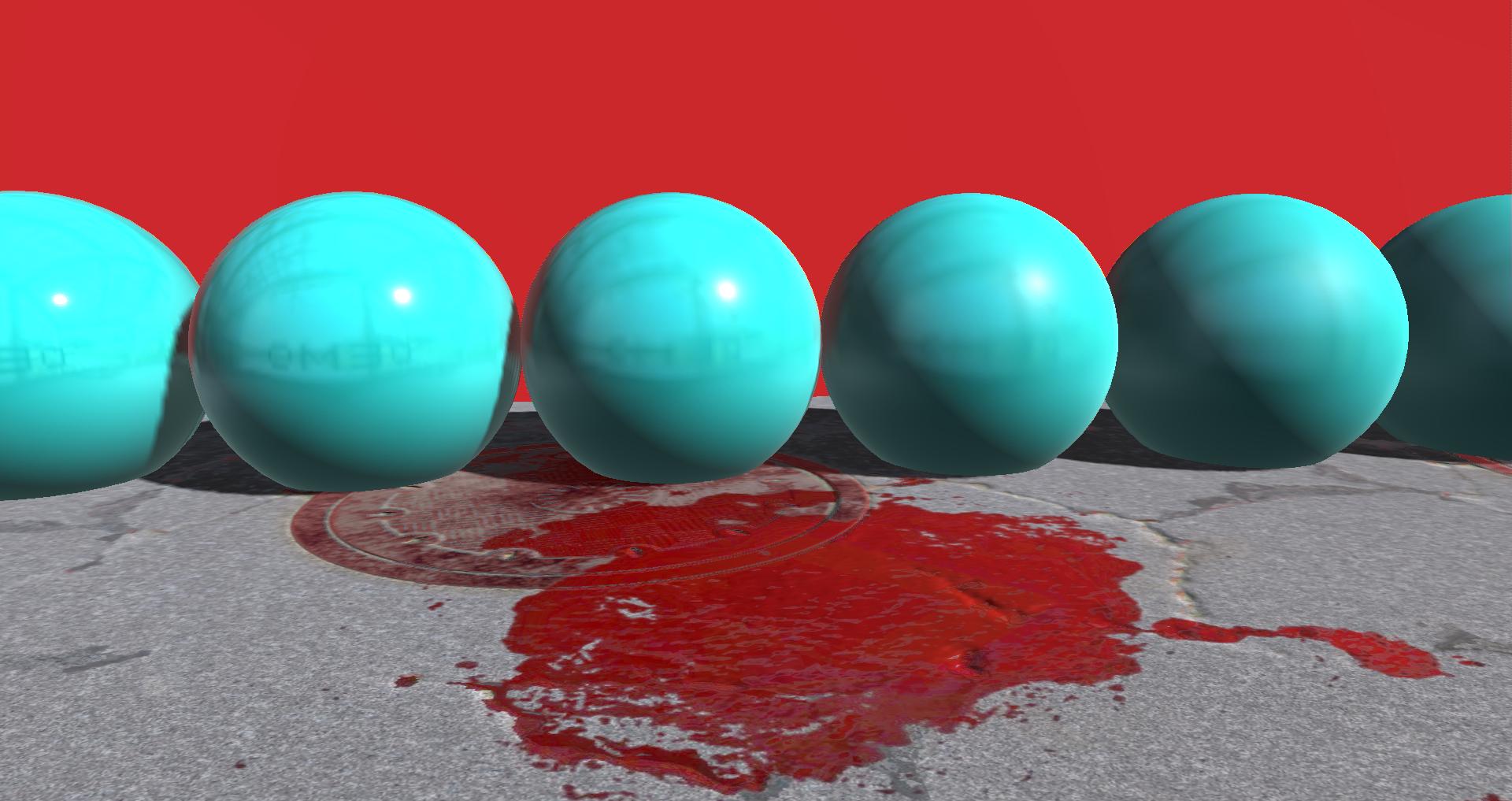

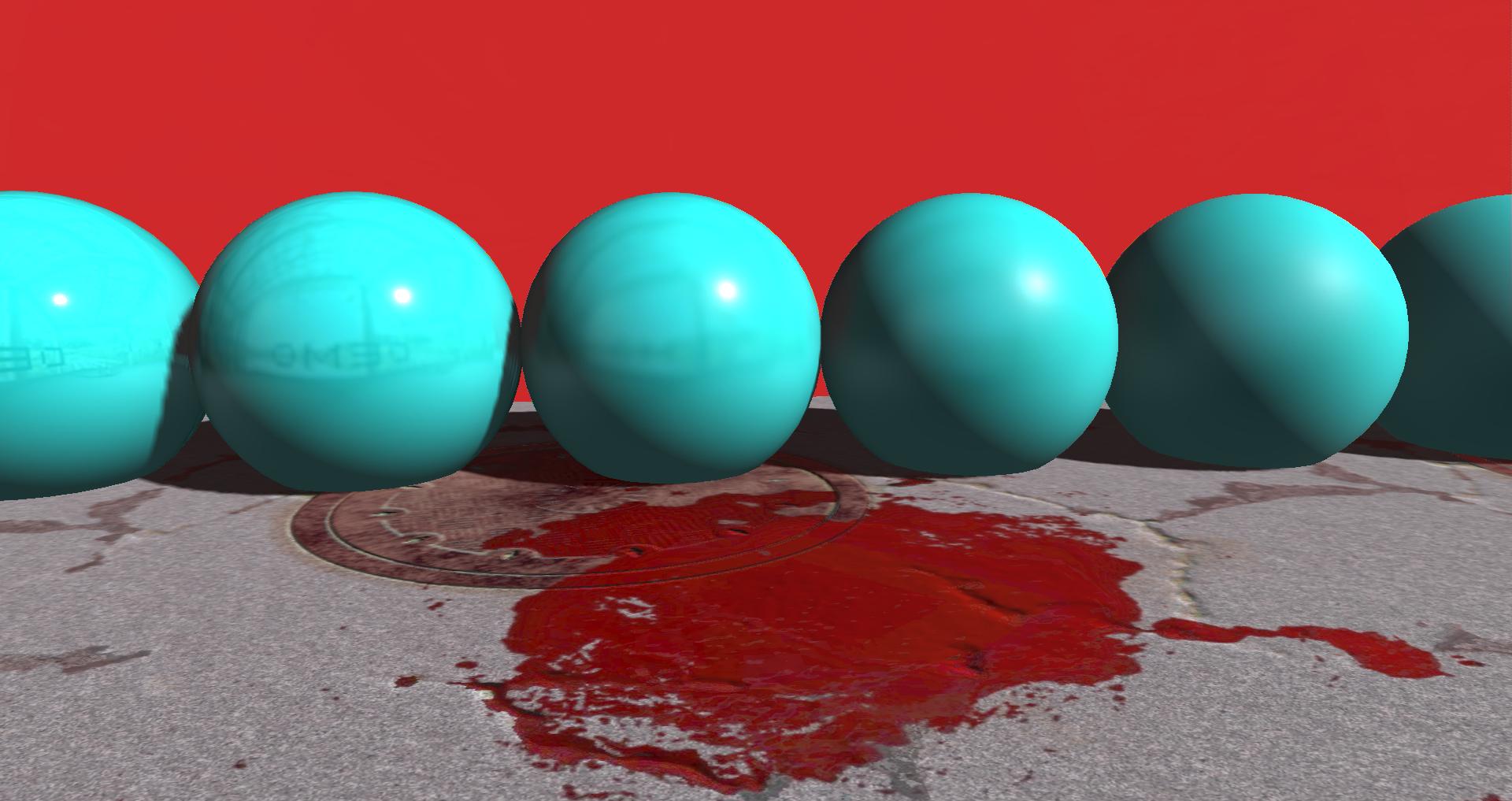

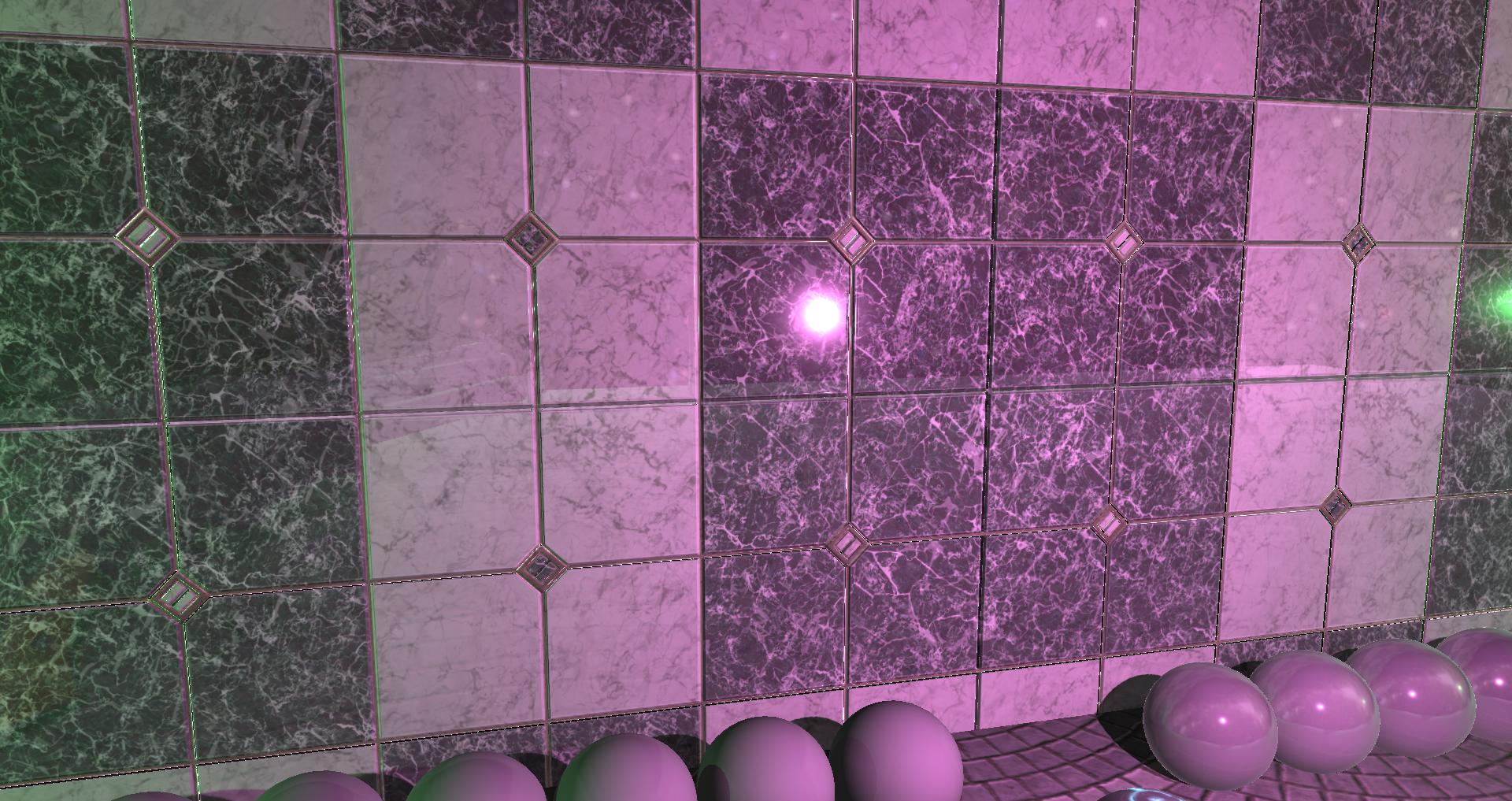

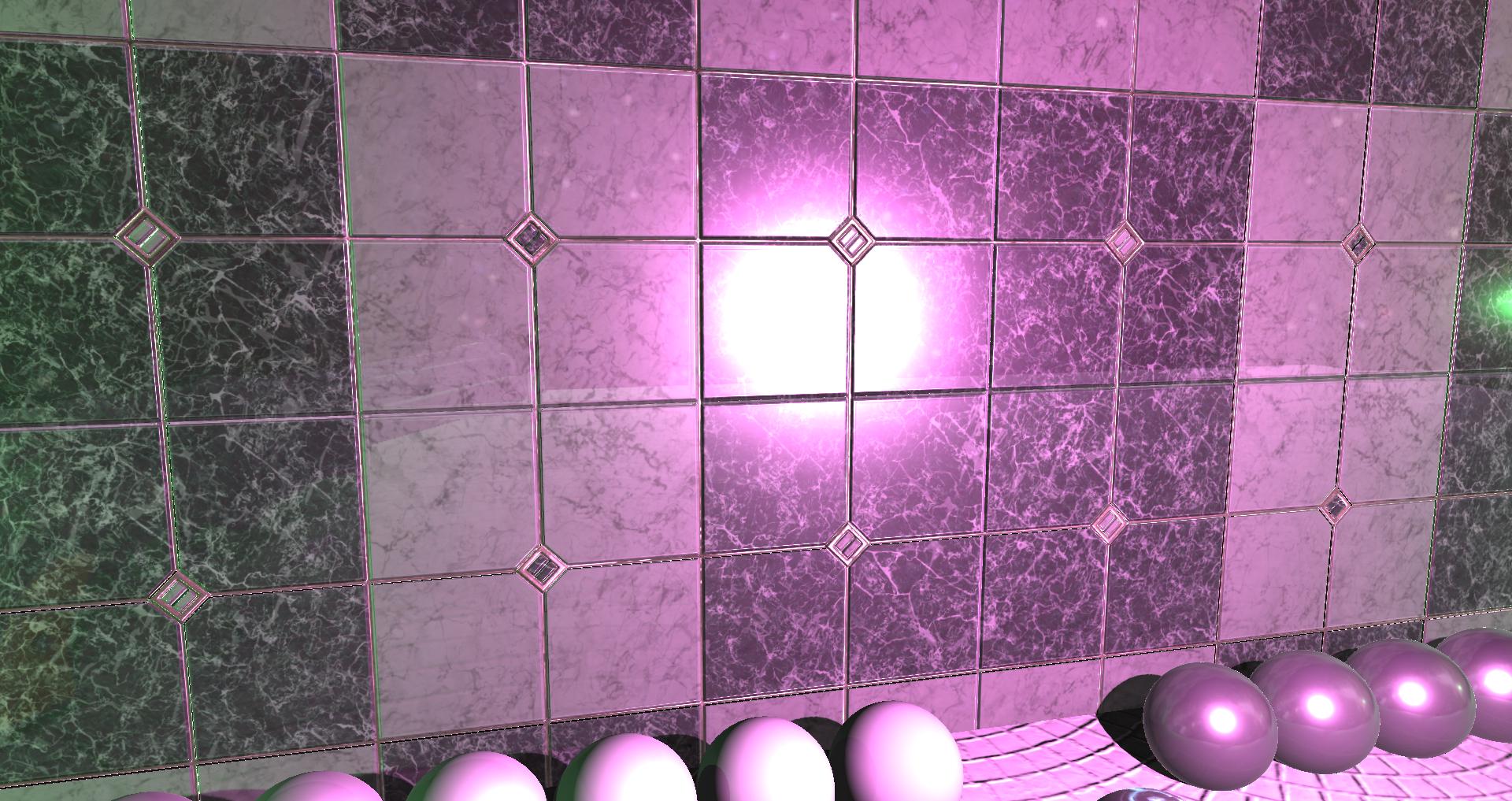

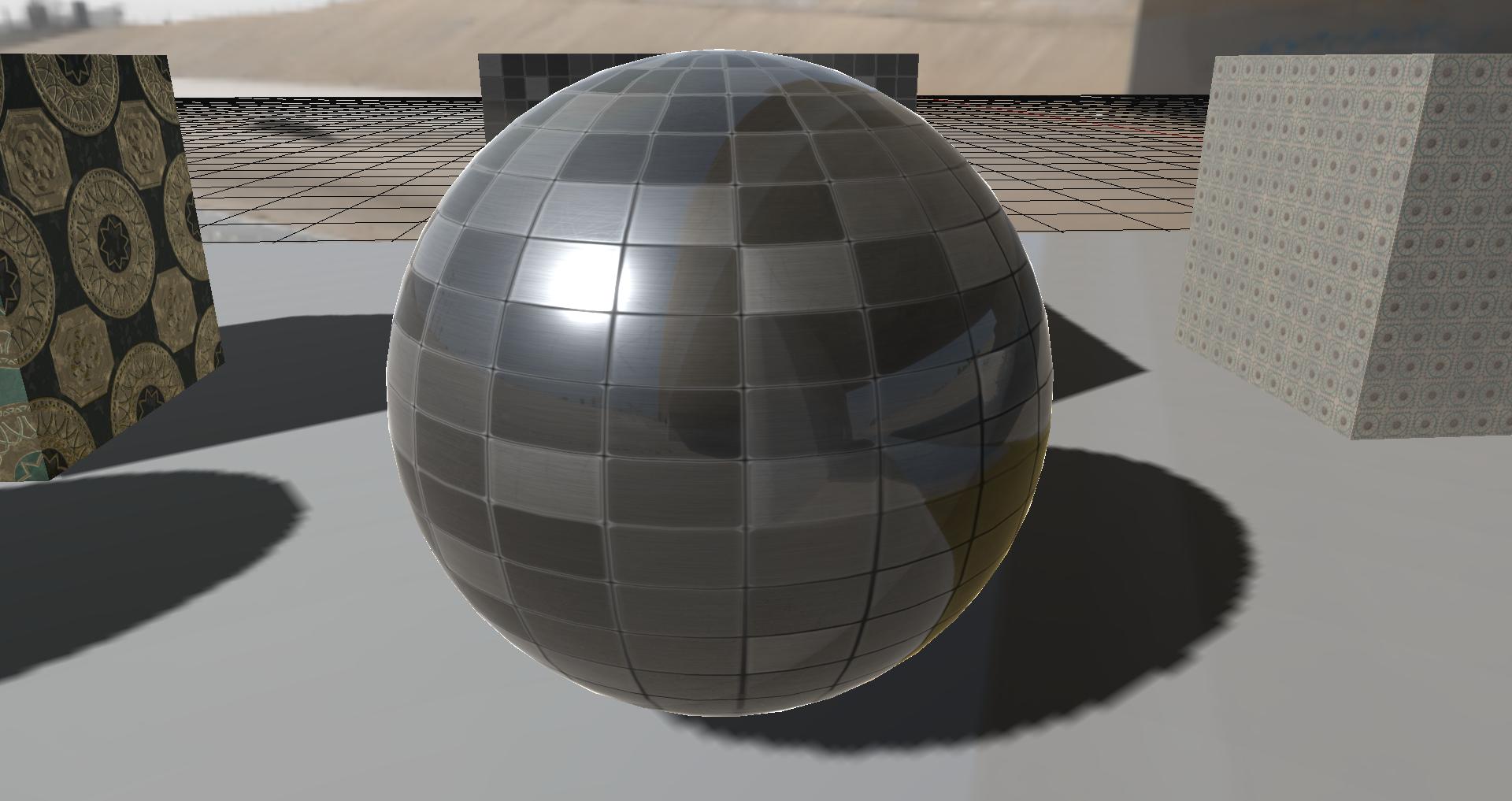

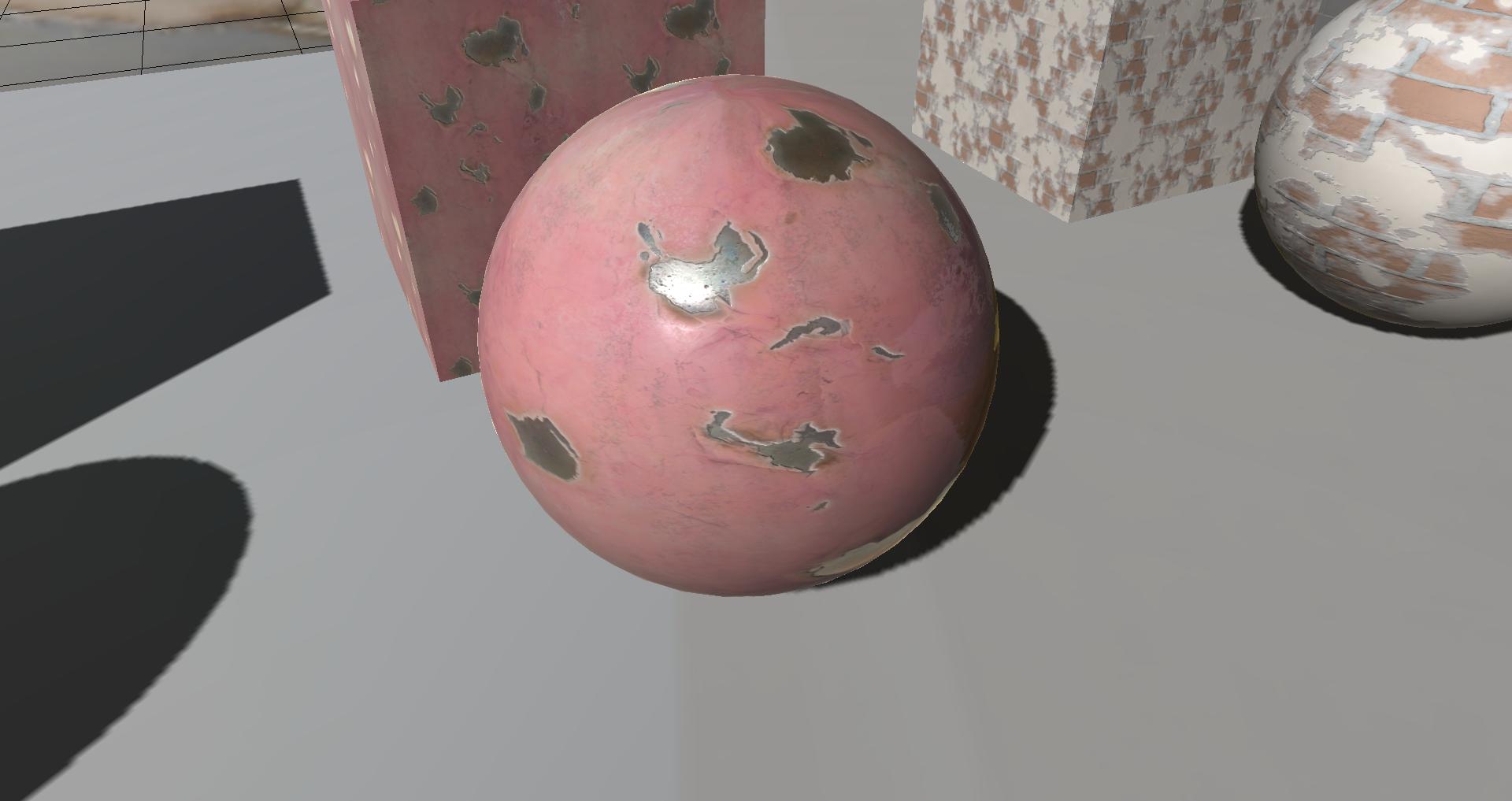

Both the Roughness and Metallic values affect the overall look of the material. The effect of each value is shown in the images below; these images were taken from the Material Test scene included in the PBR repository.
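Why the two values change the look so much can be seen from how they typically enter the lighting. The C++ sketch below assumes the common metallic/roughness convention (a dielectric specular reflectance of 0.04 and a GGX distribution with alpha = roughness squared); this is not confirmed to match the PBR shaders exactly, and the names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical sketch of the common metallic/roughness convention.
// Assumption: the PBR shaders may differ in details.
constexpr float kPi = 3.14159265358979f;

struct SurfaceTerms {
    float diffuseColor;  // colour fed to the diffuse BRDF
    float f0;            // specular reflectance at normal incidence
};

// Metallic blends F0 from a dielectric constant (0.04) towards the albedo
// and removes the diffuse contribution for fully metallic surfaces.
SurfaceTerms metallicWorkflow(float albedo, float metallic) {
    const float dielectricF0 = 0.04f;
    return {albedo * (1.0f - metallic),
            dielectricF0 + (albedo - dielectricF0) * metallic};
}

// GGX / Trowbridge-Reitz normal distribution with alpha = roughness^2.
// Low roughness gives a tall, narrow highlight; high roughness a broad, dim one.
float ggxDistribution(float roughness, float NdotH) {
    assert(roughness > 0.0f && roughness <= 1.0f);
    float alpha = roughness * roughness;
    float a2 = alpha * alpha;
    float d = NdotH * NdotH * (a2 - 1.0f) + 1.0f;
    return a2 / (kPi * d * d);
}
```

A fully metallic material loses its diffuse term and reflects with its albedo colour, while a non-metallic one keeps its albedo as diffuse and reflects only about 4% at normal incidence. Lower roughness concentrates the highlight around the reflection direction and falls off quickly away from it.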

Roughness:

Metallic:

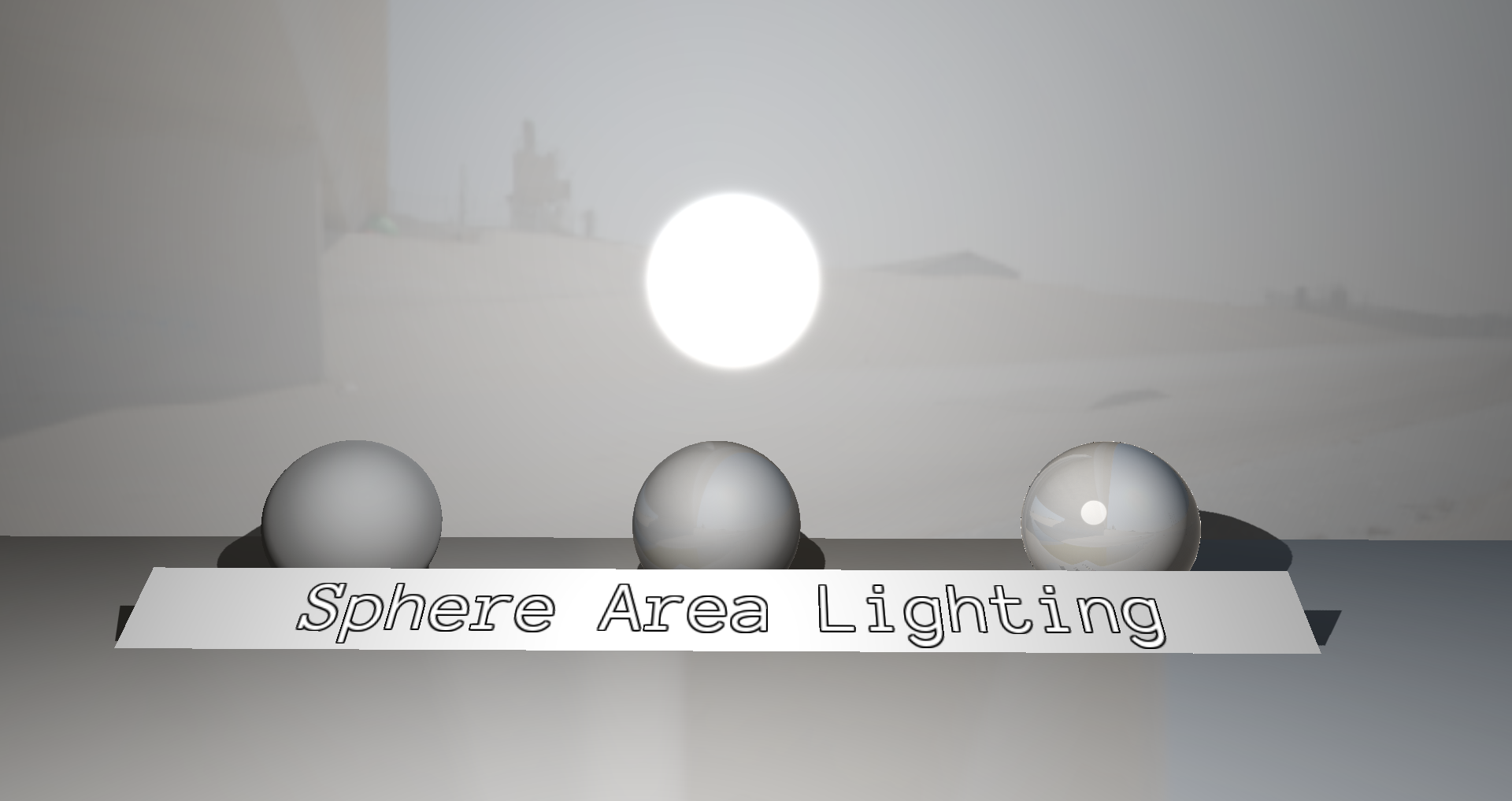

Area lighting is still in development, although sphere lights are already supported. The image below shows sphere lights in the Material Test scene.
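The post does not describe how sphere lights are implemented. One widely used technique is the "representative point" method from Karis's "Real Shading in Unreal Engine 4" (2013): for the specular term, the light direction is replaced by the direction to the point on the sphere closest to the reflection ray. The C++ sketch below shows that idea only as an assumption about how sphere lights can be done, not as the repository's code; `Vec3` and `sphereLightSpecularDir` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Minimal 3D vector helpers for the sketch.
struct Vec3 {
    float x, y, z;
};

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
float length(Vec3 a) { return std::sqrt(dot(a, a)); }
Vec3 normalize(Vec3 a) { return a * (1.0f / length(a)); }

// Representative point method for sphere lights (assumption: one common
// approach, not necessarily what the PBR repository does).
//   toLightCenter: vector from the shaded point to the light centre
//   reflectDir:    normalized reflection of the view vector about the normal
//   radius:        sphere light radius
// Returns the normalized direction to the point on the sphere closest to the
// reflection ray, to be used as the light direction for the specular term.
Vec3 sphereLightSpecularDir(Vec3 toLightCenter, Vec3 reflectDir, float radius) {
    assert(radius >= 0.0f);
    Vec3 centerToRay = reflectDir * dot(toLightCenter, reflectDir) - toLightCenter;
    float dist = length(centerToRay);
    // If the ray passes through the centre, the centre is the closest point.
    float t = dist > 0.0f ? std::min(radius / dist, 1.0f) : 0.0f;
    return normalize(toLightCenter + centerToRay * t);
}
```

When the reflection ray passes through the sphere, the result is simply the reflection direction; otherwise it is bent towards the nearest point on the sphere. Karis also applies an energy normalization factor to the specular distribution, which this sketch omits.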

Sphere Light

Download

Currently the download will not work with the OpenGL deferred renderers due to a known issue (no fix is available yet).

https://github.com/dragonCASTjosh/Urho3D

Using the Urho3D license makes it easier for us to pull the good bits over into the upstream Urho3D project, as there should then be no copyright issue in proactively copying the code over.