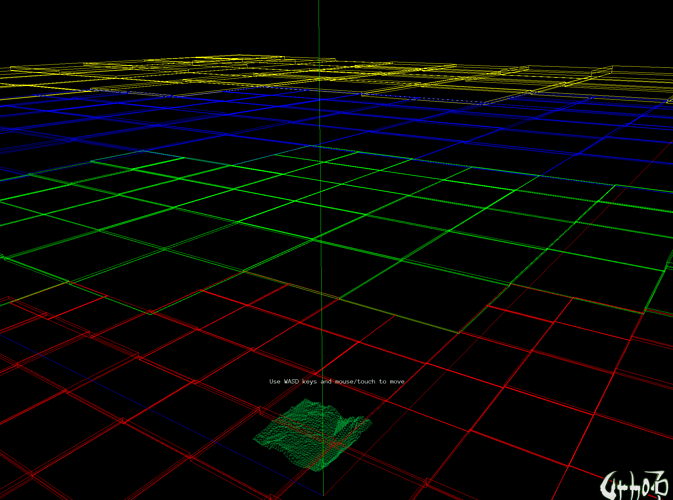

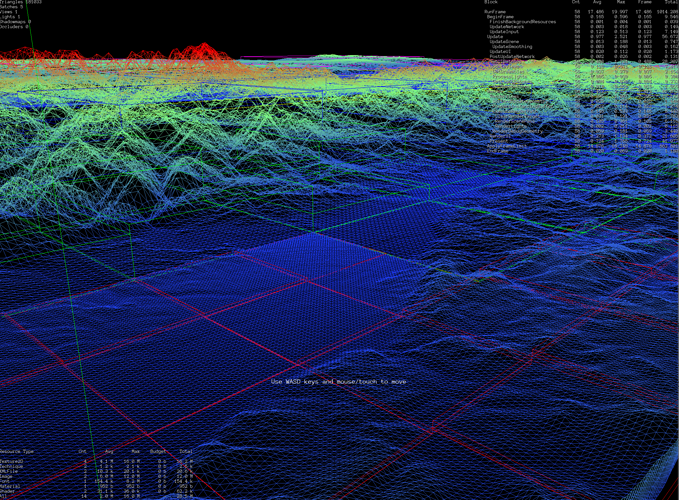

I’ve been trying to implement the CDLOD terrain algorithm in the Urho3D engine.

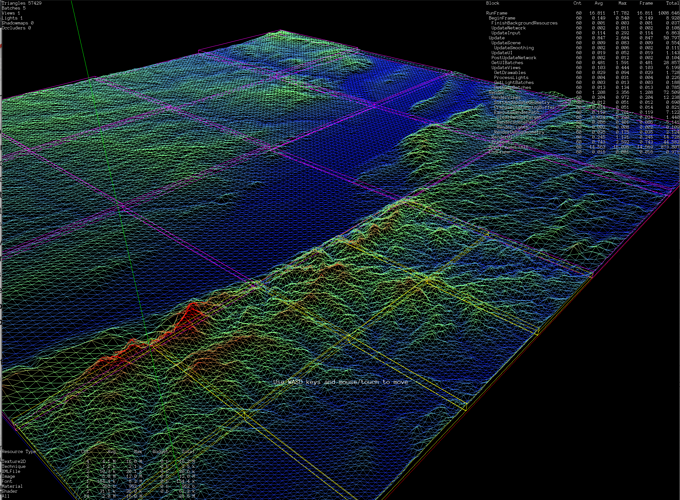

I finished the QuadTree and LOD node selection part, and it works as expected.

I created a single grid mesh of fixed dimensions and loaded it into a vertex buffer.

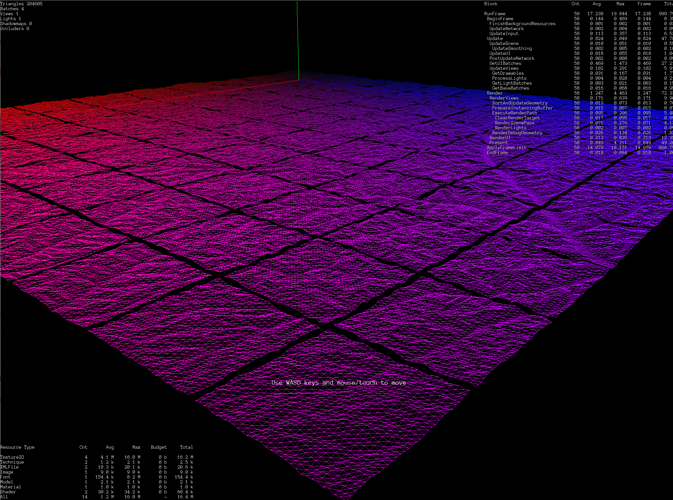
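For reference, here is a minimal sketch of how such a fixed-size grid could be generated on the CPU before uploading it. The `GridVertex`, `GridMesh` and `BuildUnitGrid` names are hypothetical (they are not Urho3D types), and the sketch assumes the grid spans [0, 1] on X and Z so that a later scale and translation can place it anywhere.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper types for illustration; not part of Urho3D.
struct GridVertex { float x, y, z; };

struct GridMesh {
    std::vector<GridVertex> vertices;
    std::vector<std::uint32_t> indices;
};

// Builds a flat grid of cellsPerSide x cellsPerSide quads covering
// [0, 1] on the X and Z axes (assumed layout), with Y fixed at 0.
GridMesh BuildUnitGrid(unsigned cellsPerSide)
{
    assert(cellsPerSide > 0);

    GridMesh mesh;
    const unsigned vertsPerSide = cellsPerSide + 1;

    mesh.vertices.reserve(vertsPerSide * vertsPerSide);
    for (unsigned z = 0; z < vertsPerSide; ++z) {
        for (unsigned x = 0; x < vertsPerSide; ++x) {
            mesh.vertices.push_back({
                static_cast<float>(x) / cellsPerSide,
                0.0f,
                static_cast<float>(z) / cellsPerSide});
        }
    }

    // Two triangles per cell. The winding order here is an assumption;
    // swap two indices per triangle if the terrain gets back-face culled.
    mesh.indices.reserve(cellsPerSide * cellsPerSide * 6);
    for (unsigned z = 0; z < cellsPerSide; ++z) {
        for (unsigned x = 0; x < cellsPerSide; ++x) {
            const std::uint32_t i0 = z * vertsPerSide + x;
            const std::uint32_t i1 = i0 + 1;
            const std::uint32_t i2 = i0 + vertsPerSide;
            const std::uint32_t i3 = i2 + 1;
            mesh.indices.insert(mesh.indices.end(), {i0, i2, i1, i1, i2, i3});
        }
    }
    return mesh;
}
```

With this layout, `BuildUnitGrid(4)` yields 25 vertices and 96 indices.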

The next step is to “duplicate” this grid mesh’s vertices in the vertex shader, scaling and translating them to fill all the square areas.

I’m having some difficulty understanding how to do that in Urho3D.

I guess I should use instancing for such a task. For example, I can add scale and translation variables to the vertex shader:

    uniform vec3 translation;
    uniform float scale;
    ...
    // then in main()
    WorldPosition = scale*position + translation;
    float height = getHeight(WorldPosition.xz);
    ...

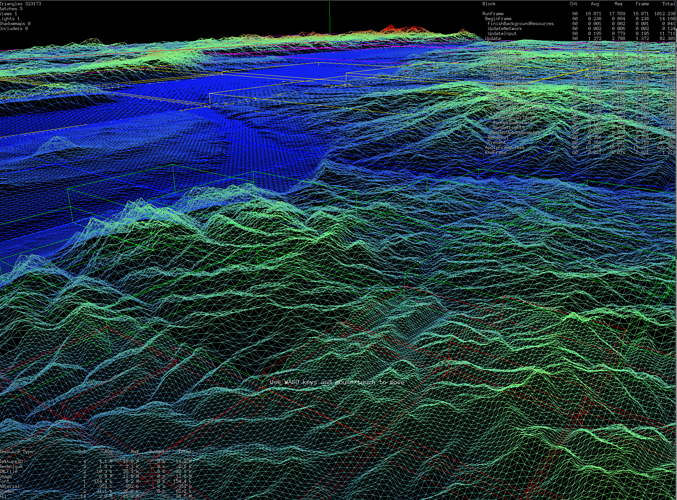

Then I would set those two variables differently for each square terrain area (treating each one as a separate instance).
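To make the idea concrete, here is a small CPU-side sketch of how the two values might be derived from a selected quadtree node, together with a mirror of the shader line `scale*position + translation`. The `SelectedNode`, `InstanceParams`, `MakeInstanceParams` and `ToWorld` names are hypothetical, and the sketch assumes the shared grid spans [0, 1] on X and Z and that each node is described by its minimum corner and side length. It does not show how the values reach the shader in Urho3D.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

// Hypothetical description of one node picked by the LOD selection:
// its minimum corner in world space (XZ plane) and its side length.
struct SelectedNode {
    float minX;
    float minZ;
    float size;
};

// The two per-area values from the shader snippet.
struct InstanceParams {
    Vec3 translation;
    float scale;
};

// A unit grid scaled by the node size and moved to the node's
// minimum corner covers exactly that node's square.
InstanceParams MakeInstanceParams(const SelectedNode& node)
{
    assert(node.size > 0.0f);
    return {{node.minX, 0.0f, node.minZ}, node.size};
}

// CPU-side mirror of `scale*position + translation`, handy for checking
// the values before wiring them into the vertex shader.
Vec3 ToWorld(const Vec3& gridPosition, const InstanceParams& inst)
{
    return {inst.scale * gridPosition.x + inst.translation.x,
            inst.scale * gridPosition.y + inst.translation.y,
            inst.scale * gridPosition.z + inst.translation.z};
}
```

For a node with minimum corner (64, 128) and size 32, the grid corner (1, 0, 1) maps to world position (96, 0, 160), so neighbouring nodes of the same size line up edge to edge.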

But I am not sure how to implement this within the Urho3D framework. For example, I noticed that there is a Graphics::DrawInstanced() method that uses the instancing technique I mentioned, and that it is called by BatchGroup::Draw(). I also noticed that the Batch class has an instancingData_ member. But I haven’t found any examples that use them this way.

BTW, I checked the samples and found that 20_HugeObjectCount uses StaticModelGroup to clone lots of static models for a similar purpose, but I guess that’s probably not what I want.

Is there any help or sample code I could follow?


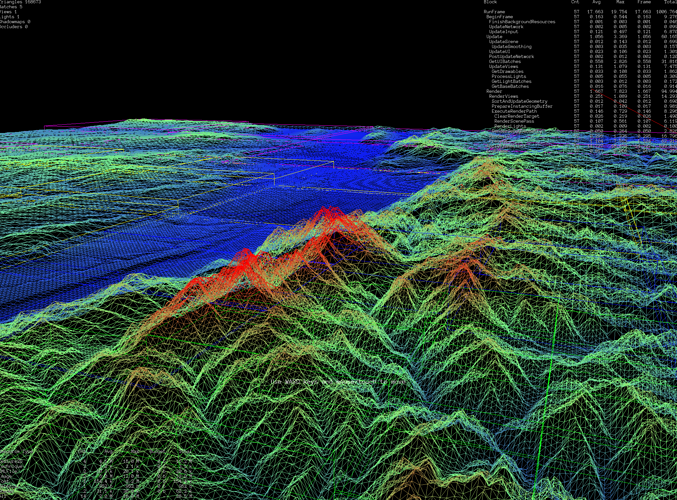

... to discover that instancing is not the silver-bullet solution to all my rendering problems, as I naively thought. In fact, it improves performance only in some (rather rare) circumstances, and in the wrong circumstances it can actually make rendering slower.
