Last question first, since the code is fairly long: depending on the scale of your game (you are going for a very large scale, right?), you may not need dynamic spawning at all. If you are reasonably sure it would be an issue, the answer depends on how your map is set up.

I set up my maps as a set of 'chunks': each chunk is a node with all of the static content in that chunk parented to it (the player is parented directly to the scene). Since my chunks get enabled and disabled, I would just have each chunk handle spawning its triggers. Alternatively, if your scene layout is fairly static, you can keep the triggers loaded and just enable/disable the trigger node. That is what I do, but I think you are going for a larger scale than I am, which may call for different solutions.

If your map is not divided this way, you may be able to use the Octree for the zoning, provided the zoning does not need to be map-aware (i.e. the zones don't have to correspond to, say, a room or an alley).

If you do want map-aware zoning, but keeping the collision components spawned and merely disabled is a problem, you can instead mark your map with empty nodes that have the Trigger component attached, and let it spawn the collision components later as needed, storing the required parameters in the node's user variables (or the trigger's attributes).

If this too is too burdensome, you will probably have to write a subsystem that stores all of the triggers in a structure that can be queried efficiently by proximity to a location (e.g. the camera's). It would instantiate the nearest 50 or so every second or so, and perhaps give each one a timer so that it removes itself after 15 seconds (or whatever) if it is not re-instantiated. To be clear, I have very little idea of what this would require, so I would test the other solutions first; I'm not sure this is a good answer.

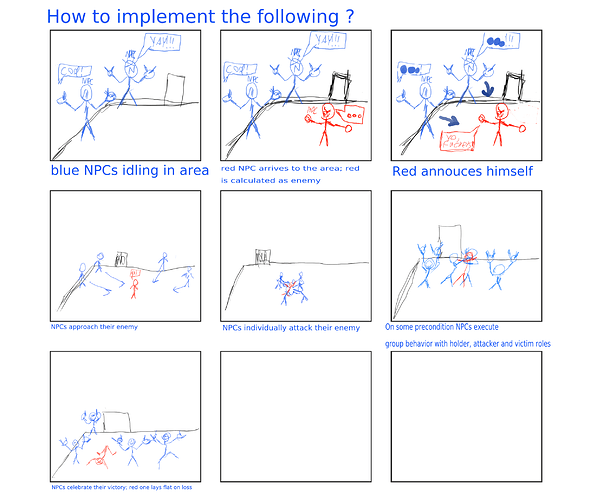

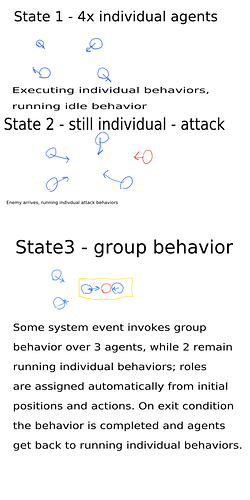

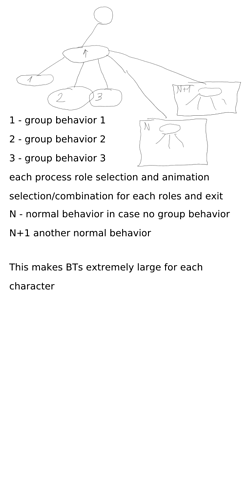
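The last option above (a proximity-queryable store of triggers, refreshed periodically and expired by a timer) could look roughly like the following engine-independent C++ sketch. It is only an illustration under assumptions: `TriggerDesc`, `TriggerStreamer` and the uniform-grid layout are hypothetical, and "active" here just means "has a recent timestamp"; in a game, the first refresh is where you would create the trigger node and the expiry is where you would remove it.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical record of a trigger that may or may not be instantiated.
// A real one would also carry whatever the trigger needs (shape, size, battle setup...).
struct TriggerDesc {
    int id;
    float x, z; // position on the ground plane
};

// Hypothetical streaming subsystem: all trigger descriptions live in a uniform
// grid; Update() refreshes the ones nearest the focus point and expires stale ones.
class TriggerStreamer {
public:
    TriggerStreamer(float cellSize, std::size_t maxActive, float lifetime)
        : cellSize_(cellSize), maxActive_(maxActive), lifetime_(lifetime) {}

    void Add(const TriggerDesc& t) { grid_[Key(Cell(t.x), Cell(t.z))].push_back(t); }

    // Call periodically (e.g. once per second) with the camera position and current time.
    void Update(float camX, float camZ, float searchRadius, float now) {
        // 1. Collect triggers within searchRadius from the grid cells around the camera.
        std::vector<std::pair<float, int>> candidates; // (squared distance, trigger id)
        const int r = static_cast<int>(std::ceil(searchRadius / cellSize_));
        const int cx = Cell(camX), cz = Cell(camZ);
        for (int dx = -r; dx <= r; ++dx) {
            for (int dz = -r; dz <= r; ++dz) {
                auto cell = grid_.find(Key(cx + dx, cz + dz));
                if (cell == grid_.end()) continue;
                for (const TriggerDesc& t : cell->second) {
                    const float ddx = t.x - camX, ddz = t.z - camZ;
                    const float d2 = ddx * ddx + ddz * ddz;
                    if (d2 <= searchRadius * searchRadius) candidates.emplace_back(d2, t.id);
                }
            }
        }

        // 2. Spawn or refresh only the nearest maxActive_ of them.
        const std::size_t n = std::min(maxActive_, candidates.size());
        std::partial_sort(candidates.begin(), candidates.begin() + n, candidates.end());
        for (std::size_t i = 0; i < n; ++i) lastSeen_[candidates[i].second] = now;

        // 3. Despawn triggers that have not been refreshed within the lifetime.
        for (auto it = lastSeen_.begin(); it != lastSeen_.end();) {
            if (now - it->second > lifetime_) it = lastSeen_.erase(it);
            else ++it;
        }
    }

    bool IsActive(int id) const { return lastSeen_.count(id) != 0; }
    std::size_t ActiveCount() const { return lastSeen_.size(); }

private:
    int Cell(float v) const { return static_cast<int>(std::floor(v / cellSize_)); }
    static std::uint64_t Key(int cx, int cz) {
        return (static_cast<std::uint64_t>(static_cast<std::uint32_t>(cx)) << 32) |
               static_cast<std::uint32_t>(cz);
    }

    float cellSize_;
    std::size_t maxActive_;
    float lifetime_;
    std::unordered_map<std::uint64_t, std::vector<TriggerDesc>> grid_;
    std::unordered_map<int, float> lastSeen_; // trigger id -> time of last refresh
};
```

With, say, `TriggerStreamer streamer(50.0f, 50, 15.0f);` and `streamer.Update(camX, camZ, 200.0f, time)` called once a second, at most 50 triggers are refreshed per call, and a trigger that falls out of that set is released once 15 seconds pass without a refresh. The uniform grid is just the simplest proximity structure to show; the Octree or a k-d tree would serve the same purpose.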

And here's how I set up triggers for battles in my game. You would probably also need to subscribe to E_NODECOLLISIONEND, then remove the agents that leave the region, and possibly also those that end up assigned to a group action (if the group action takes an agent out of the region, that is fine, since the GroupManager would still store it).

```cpp
#pragma once

#include <Urho3D.h>
#include <Scene/Component.h>
#include <Scene/Node.h>          // Node::GetComponent, Node::SendEvent
#include <Core/Object.h>
#include <Core/Context.h>

#include "../CollisionMasks.hpp"
#include "../Character.hpp"

#include <Physics/PhysicsEvents.h>
#include <Physics/RigidBody.h>   // RigidBody is configured in OnNodeSet

using namespace Urho3D;

#include "BattleEvents.hpp"
#include "BattleQueue.hpp"

#include <IO/Log.h>

/// Trigger volume that starts a battle when a character walks into it.
class BattleTrigger : public Component
{
    OBJECT(BattleTrigger, Component);

public:
    /// Queue the battle is run through once it is triggered.
    SharedPtr<BattleQueue> battle_;

    BattleTrigger(Context* context) :
        Component(context),
        battle_(new BattleQueue(context, nullptr, 2 /*number of participants*/))
    {
    }

    static void RegisterBattleTrigger(Context* context)
    {
        context->RegisterFactory<BattleTrigger>();
    }

    /// Handle scene node being assigned at creation.
    virtual void OnNodeSet(Node* node)
    {
        if (node == nullptr)
            return;

        // todo: change to GetComponents? (only the first RigidBody is configured)
        RigidBody* body = node->GetComponent<RigidBody>();
        if (body)
        {
            // Only report overlaps with people, and make the body a trigger (no physical response).
            body->SetCollisionLayerAndMask(COLLISION_BATTLE, COLLISION_PERSON);
            body->SetTrigger(true);
            SubscribeToEvent(node, E_NODECOLLISIONSTART, HANDLER(BattleTrigger, HandleCollision));
        }
    }

    void HandleCollision(StringHash eventType, VariantMap& eventData)
    {
        if (!enabled_)
            return;

        Node* other = static_cast<Node*>(eventData[NodeCollisionStart::P_OTHERNODE].GetPtr());
        if (other == nullptr)
            return;

        // Copy the collision data (listeners still see P_OTHERNODE etc.) instead of writing
        // into the collision event's own map, so keys added here, such as the capture flag,
        // cannot linger if that map is reused or seen by other collision listeners.
        VariantMap battleData = eventData;
        battleData[BattleTriggered::P_BATTLE_QUEUE] = battle_;
        battleData[BattleTriggered::P_TARGET] = other;

        // The pre-trigger lets the character 'capture' the trigger and display a dialogue.
        // Skipping straight to E_BATTLE_TRIGGERED is closer to what you want.
        // Or store the agents that enter and remove them when they leave, using this
        // class as the 'manager' that decides when a GroupManager should be instantiated.
        node_->SendEvent(E_BATTLE_PRE_TRIGGERED, battleData);

        if (battleData[BattlePreTriggered::P_CAPTURE].GetBool())
            LOGDEBUG("Battle trigger captured.");
        else
            node_->SendEvent(E_BATTLE_TRIGGERED, battleData);
        // In my game, the Player object receives this event and then has the queue start the battle.
    }
};
```
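The bookkeeping suggested before the code (also handle E_NODECOLLISIONEND, drop agents that leave or get claimed by another group action, and let the trigger decide when a group should start) boils down to a small set per trigger. Below is an engine-independent C++ sketch of that idea; `RegionTracker`, `AgentId`, the threshold and the callback are all hypothetical, and in Urho3D the `On...` methods would be called from the collision handlers.

```cpp
#include <cstddef>
#include <functional>
#include <unordered_set>
#include <utility>
#include <vector>

using AgentId = unsigned; // hypothetical: e.g. the agent node's ID

// Hypothetical per-trigger bookkeeping: tracks which agents are inside the
// region and decides when enough are present to start a group action.
class RegionTracker {
public:
    using StartGroup = std::function<void(const std::vector<AgentId>&)>;

    RegionTracker(std::size_t threshold, StartGroup startGroup)
        : threshold_(threshold), startGroup_(std::move(startGroup)) {}

    // Call from the E_NODECOLLISIONSTART handler.
    void OnEnter(AgentId id) {
        if (inside_.insert(id).second) MaybeStartGroup(); // duplicate enters are ignored
    }

    // Call from the E_NODECOLLISIONEND handler.
    void OnLeave(AgentId id) { inside_.erase(id); }

    // Call when some other group action claims an agent that is still inside.
    void OnAssignedElsewhere(AgentId id) { inside_.erase(id); }

    std::size_t Count() const { return inside_.size(); }

private:
    void MaybeStartGroup() {
        if (inside_.size() < threshold_) return;
        std::vector<AgentId> members(inside_.begin(), inside_.end());
        // The members now belong to the group action (in the design above, a
        // GroupManager would store them), so this region stops counting them.
        inside_.clear();
        startGroup_(members);
    }

    std::size_t threshold_;
    StartGroup startGroup_;
    std::unordered_set<AgentId> inside_;
};
```

Using a set rather than a plain counter means a repeated enter event cannot inflate the count, and clearing the set when the group starts reflects the idea that the GroupManager, not the trigger, owns those agents from then on.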